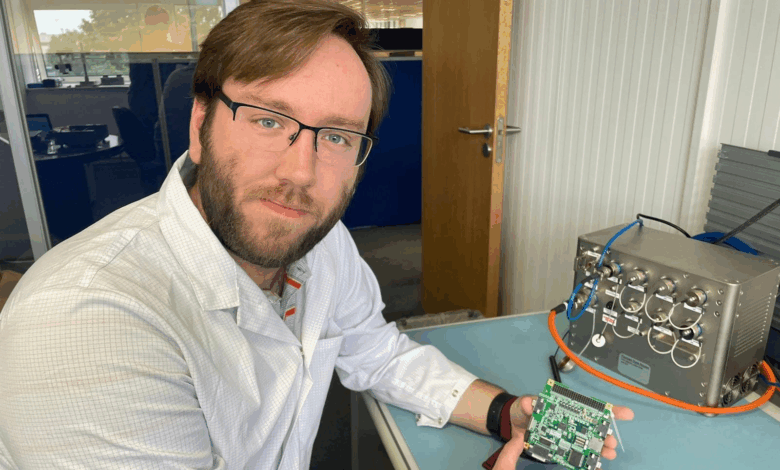

James F. Murphy is an aerospace AI researcher and engineer who specializes in developing embedded machine learning systems for spacecraft. Currently working at Réaltra Space Systems and a graduate of University College Dublin, James has contributed to one of Ireland’s most groundbreaking space projects: EIRSAT-1, the country’s first satellite mission. His work focuses on anomaly detection—helping satellites recognize and respond to system faults in real time, without relying on constant ground support.

In this interview, James explains how onboard AI can catch problems before they escalate, why unsupervised learning is key for space missions, and what it takes to run powerful models on tiny, power-constrained satellites. For anyone new to space tech, this conversation offers an inside look at how AI is reshaping the future of satellite operations and autonomous spaceflight.

James, for those of us new to the topic, what does “anomaly detection” mean in the context of space missions and why is it so important for satellites?

In space missions, anomaly detection means identifying when a satellite behaves in an unexpected or abnormal way, such as unusual temperature, voltage, or system activity. This is crucial because once a satellite is in orbit, there’s no way to physically repair it, and real-time communication is limited. For the smallest satellites, usually less than 10 kg, which have tight power and data constraints, even minor issues can escalate quickly. Anomaly detection helps catch these early, allowing the satellite to recover automatically or alert ground teams before the situation worsens. At Réaltra, I developed AI models that are designed to run directly on these satellites and monitor their telemetry for any deviations. These models are optimized to work on limited hardware and provide real-time fault monitoring without needing constant contact with Earth. This increases the satellite’s resilience and helps ensure mission success.

You’ve worked on AI systems that help satellites spot subtle issues before they turn into failures. Can you explain how that works in plain terms?

Satellites constantly send back data about what is happening on board, such as temperatures, voltages, and how much power they’re using. Normally, this data follows a predictable pattern. What I’ve done is build an ML system that learns what “normal” looks like by studying this data over time. Then, when the satellite is in flight, the ML model keeps checking new data against what it expects. If something starts to drift, the system can spot it early and flag it as a potential problem. This gives ground teams a chance to fix the issue before it causes a failure. What makes this powerful is that it all runs on the satellite itself, in real time, without needing constant interaction with Earth. For now, our goal is to use this as an aid to the ground segment: the models’ trustworthiness still needs to improve before satellite operators would hand over control and let the model make those decisions itself.
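To make the idea of learning “normal” and then checking new data against it concrete, here is a minimal sketch in Python. It is not the model James describes: it assumes a simple per-channel mean and standard deviation as the baseline, and the channel names, sample values, and the threshold of three standard deviations are all hypothetical.

```python
from statistics import mean, stdev

def fit_baseline(nominal):
    """Learn a per-channel (mean, standard deviation) pair from nominal telemetry."""
    return {channel: (mean(values), stdev(values)) for channel, values in nominal.items()}

def check_sample(baseline, sample, threshold=3.0):
    """Return the channels whose reading lies more than `threshold` standard
    deviations away from the learned mean."""
    flagged = []
    for channel, value in sample.items():
        mu, sigma = baseline[channel]
        # A channel that never varied in training has sigma == 0; skip it here.
        z = abs(value - mu) / sigma if sigma > 0 else 0.0
        if z > threshold:
            flagged.append(channel)
    return flagged

# Hypothetical nominal telemetry collected while the spacecraft was healthy.
nominal = {
    "battery_voltage_v": [7.9, 8.0, 8.1, 8.0, 7.9, 8.1, 8.0, 8.0],
    "panel_temp_c": [20.5, 21.0, 19.8, 20.2, 20.9, 20.4, 19.9, 20.6],
}
baseline = fit_baseline(nominal)

healthy = check_sample(baseline, {"battery_voltage_v": 8.05, "panel_temp_c": 20.3})
faulty = check_sample(baseline, {"battery_voltage_v": 7.2, "panel_temp_c": 20.4})
print(healthy)  # no channels flagged
print(faulty)   # the battery voltage channel is flagged
```

A baseline this small fits comfortably on a constrained onboard computer, which is part of the appeal, but a flight system would likely need to account for orbital cycles and correlations between channels.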

Your research involved both supervised and unsupervised machine learning. What’s the difference between the two, and how do they each help satellites catch problems?

Supervised learning means training a model using examples where the outcome is already known. In this case, you’d need labelled data showing what normal and faulty behavior looks like. The problem is that with satellites you rarely have enough examples of failures to train a model properly, and by definition, anomalies are unexpected. That’s where unsupervised learning comes in. These models don’t need labelled examples of anomalies. Instead, they learn what “normal” looks like from regular data, and then flag anything that doesn’t fit that pattern. I focused on unsupervised techniques because they’re better suited to detecting unknown or rare issues. Supervised methods can give high accuracy if you already know what to look for, but in space, we’re often trying to catch the unexpected, and that’s exactly what unsupervised learning is good at.
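The practical difference shows up in what the training data has to contain. The sketch below illustrates one common unsupervised approach, nearest-neighbour novelty detection, which is an assumption for illustration and not necessarily the technique used in James’s research. The detector is fitted on normal points only, with no fault labels, and still flags a kind of fault it has never seen. The feature values and the `margin` parameter are hypothetical.

```python
import math

def nn_distance(point, reference):
    """Distance from `point` to its nearest neighbour in `reference`."""
    return min(math.dist(point, r) for r in reference)

def fit_novelty_detector(normal_points, margin=1.5):
    """Fit on normal data only. The threshold is the largest leave-one-out
    nearest-neighbour distance seen in training, scaled by `margin`."""
    loo = [
        nn_distance(p, normal_points[:i] + normal_points[i + 1:])
        for i, p in enumerate(normal_points)
    ]
    return normal_points, margin * max(loo)

def is_anomalous(detector, point):
    """Flag a point that is farther from the normal data than the threshold."""
    reference, threshold = detector
    return nn_distance(point, reference) > threshold

# Hypothetical scaled (voltage, current) pairs from healthy operation.
normal_points = [(0.0, 0.1), (0.1, 0.0), (0.2, 0.1), (0.1, 0.2), (0.0, 0.0), (0.2, 0.2)]
detector = fit_novelty_detector(normal_points)

print(is_anomalous(detector, (0.1, 0.1)))  # inside the normal cluster
print(is_anomalous(detector, (0.1, 0.9)))  # unseen fault: current far too high
```

A supervised classifier would need labelled examples of the high-current fault before it could recognise it; the detector above only needs to know what healthy data looks like.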

You were part of Ireland’s first satellite mission, EIRSAT-1. What role did AI play in that project, and what did you learn from it?

While I wasn’t directly involved in building or flying EIRSAT-1, I worked alongside the mission team through a partnership between Réaltra and University College Dublin. Our goal was to explore how AI could enhance satellite reliability, specifically through anomaly detection. We collaborated to develop and publish a dataset based on simulated and real flight EIRSAT-1 telemetry, which could serve as a baseline for training machine learning models to detect anomalies. This kind of dataset is rare in the space industry as nobody wants to admit when their satellite suffers a problem, so creating one was a key step in advancing AI-based fault detection for space missions. From the project, I learned the importance of domain-specific data and the practical challenges of building models that must work within tight hardware and power constraints.

How do you build AI tools that work on tiny, power-limited satellites in space—far from the cloud or big computing systems?

Building AI for tiny, power-limited satellites means you have to rethink everything. In our case, we focused on building the models from the ground up. You only have a small onboard computer with tight limits on power and memory. In some missions you might be lucky and have access to an Edge AI board, but this is not always guaranteed. Instead of forcing complex models into limited hardware, I focused on designing lightweight models that still deliver good results. Since satellite telemetry is time series data, we can get away with much smaller models when compared to computer vision applications. I also found that careful preprocessing of the data, like normalizing trends and reducing noise, made a huge difference. In the end, even relatively simple anomaly detection models performed surprisingly well when the input was clean and well-processed.
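As a rough illustration of the preprocessing James mentions, the sketch below smooths a noisy channel with a trailing moving average and then rescales it using statistics taken from a nominal period. Both steps are assumptions about what such preprocessing might look like; the interview does not specify the actual pipeline, and the window size and sample values are hypothetical.

```python
from statistics import mean, pstdev

def moving_average(values, window=3):
    """Reduce noise with a trailing moving average.
    The first few outputs use a shorter window so the length is preserved."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

def standardize(values, mu, sigma):
    """Rescale values to zero mean and unit variance, given nominal statistics.
    Assumes sigma is non-zero."""
    return [(v - mu) / sigma for v in values]

# Hypothetical temperature readings: six nominal samples, then a step change.
raw = [20.0, 20.4, 19.6, 20.2, 19.8, 20.0, 24.0, 24.4, 23.6]

smoothed = moving_average(raw, window=3)
# Statistics come from the nominal period only, so the fault does not skew them.
mu, sigma = mean(smoothed[:6]), pstdev(smoothed[:6])
features = standardize(smoothed, mu, sigma)
print(features)
```

After these two steps, the nominal samples sit close to zero and the step change stands out by many standard deviations, which is why even a simple detector downstream can perform well on clean input.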

Most satellites today still use basic logic systems. What does AI do differently, and how close are we to having smarter, more autonomous satellites?

AI brings flexibility and autonomy by allowing satellites to interpret data in context, adapt to changing conditions, and make smarter decisions onboard. For example, in Earth observation, AI can identify cloudy images in real time and decide what to downlink, so that only the useful data is sent instead of everything. In anomaly detection, AI can learn normal system behavior and flag subtle faults before they become mission failures, even when the issue wasn’t seen before. And in autonomous navigation, AI can help satellites react to unexpected events, optimize their attitude control, or avoid collisions without needing immediate ground control input. While we’re not fully there yet, the trend is clear. Satellite operators used to manage a few spacecraft. Now, with megaconstellations like Starlink and Kuiper aiming for tens of thousands, manual control doesn’t scale. Add to that the challenges of deep space missions, where even communicating with the Moon involves several seconds of delay, and real-time control becomes impossible. For missions to Mars and beyond, autonomy is a mission requirement. That’s why I believe AI is essential to the future of space exploration.
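The communication delays mentioned here follow directly from the speed of light. The short sketch below works through the arithmetic; the distances are approximate figures added for illustration and do not come from the interview, and real links add processing and scheduling delays on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s

def one_way_delay_s(distance_km):
    """Light travel time in seconds for a signal crossing `distance_km`."""
    return distance_km / SPEED_OF_LIGHT_KM_S

# Approximate distances from Earth (assumed for illustration).
MOON_AVG_KM = 384_400
MARS_NEAR_KM = 54_600_000
MARS_FAR_KM = 401_000_000

moon_round_trip_s = 2 * one_way_delay_s(MOON_AVG_KM)
mars_near_min = one_way_delay_s(MARS_NEAR_KM) / 60
mars_far_min = one_way_delay_s(MARS_FAR_KM) / 60

print(f"Moon round trip: {moon_round_trip_s:.2f} s")
print(f"Mars one way: {mars_near_min:.1f} to {mars_far_min:.1f} min")
```

A command and its acknowledgement take about 2.6 seconds to and from the Moon on light time alone, and anywhere from roughly 3 to 22 minutes each way for Mars, which is why a spacecraft there has to handle faults on its own.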

What was it like testing your models using real satellite data and hardware-in-the-loop simulations? How close did that feel to the real thing?

With the EIRSAT-1 dataset, it was the real thing. While we initially trained and validated our models using ground test data, the real breakthrough came when we ran them on actual flight data from the first three months of EIRSAT-1’s mission. That meant our models were being tested against the same telemetry the satellite experienced in orbit, including real variations, environmental effects, and operational quirks you just can’t simulate perfectly. To make the testing even more representative, we used hardware-in-the-loop (HIL) setups that ran the models on embedded systems, similar in capability to what would fly on a CubeSat. Combined with the EIRSAT-1 telemetry, this gave us a highly representative environment to assess performance under real constraints. This end-to-end system is the closest we can get to flying our model on EIRSAT-1 without actually flying it.

Looking ahead, how do you see AI transforming space exploration? Will future missions rely more on AI to keep spacecraft safe and smart?

As missions become more complex, distant, and data-rich, traditional methods of control and monitoring simply won’t scale. AI offers a way to make spacecraft smarter, more autonomous, and better able to handle the unexpected. In the near term, we’ll see AI used for onboard fault detection, real-time data prioritization, and autonomous navigation, reducing reliance on constant ground support. Over time, AI will enable spacecraft to make decisions independently, adapt to changing environments, and even coordinate with other spacecraft in swarms or constellations. For deep space missions, where communication delays make real-time control impossible, AI will be essential for survival and mission success. Whether it’s exploring distant planets or managing thousands of satellites in orbit, I believe we will see the adoption of AI increase exponentially in the space industry, much as terrestrial applications have exploded in the last few years thanks to the likes of LLMs.