Insider risk, the potential for harm to an organization arising from the action or inaction of an insider, has become a significant challenge for businesses and government agencies alike. The conditions that expose organizations to insider risk have grown sharply during the past few years, and they continue to expand. One recent report found that 83 percent of organizations experienced at least one insider attack in 2024, up from 60 percent in 2023. Nearly half (48 percent) reported that insider attacks became more frequent from 2023 to 2024.

This dramatic increase can be attributed to various factors, one being the evolving workplace. The growth of remote and hybrid work has greatly increased insider risk by expanding the potential attack surface. With more employees working away from closely monitored on-premises environments, often using unprotected private devices on unsafe public connections, proper security protocols can be overlooked, and fewer barriers exist to restrict risky behavior.

Also, the average worker is no match for the current external threat landscape. The sophistication and scale of threats have grown by leaps and bounds. Today, threat actors regularly carry out successful phishing, spear phishing, and whaling attacks using convincing deepfakes, hyper-targeted AI-driven communications, and social engineering tactics to prey on workers who let their guard down.

Successfully mitigating insider risks that can arise from these and other factors requires more than simply detecting and remedying an event. Effective insider risk management solutions proactively identify the precursors of an event and provide the right level of visibility to mitigate the risk before any unauthorized action becomes a threat to the organization. But proactively monitoring and analyzing the many and varied factors that can contribute to insider risk, particularly among large, dispersed enterprises, is a daunting task for any internal risk management team. Fortunately, Artificial Intelligence (AI) is a powerful tool that can be leveraged for faster, more thorough insider risk analysis.

AI as a Powerful Risk Management Tool

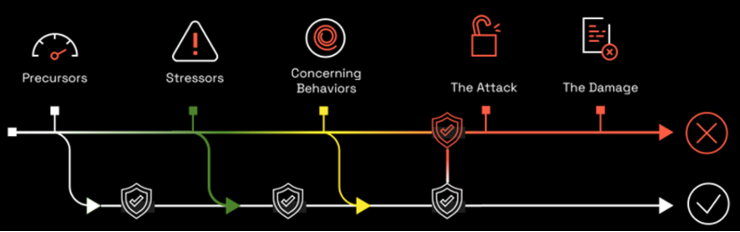

Effective, proactive insider risk management works across several stages to prevent an insider event and its resulting damage. The Critical Pathway for Insider Risk (CPIR) model describes these stages:

Predisposing Factors: Personal characteristics or vulnerabilities that can make an individual more susceptible to insider risk.

Stressors: External events or situations, such as job dissatisfaction or personal financial problems, that can put pressure on an individual and exacerbate their predispositions.

Concerning Behaviors: Observable actions, such as decreased productivity or unauthorized access to sensitive information, that may indicate an increased risk of insider threat.

Organizational Response: The organization’s actions concerning employee behaviors. A poor response can escalate the situation and increase the likelihood of an insider incident.

Critical Pathway for Insider Risk (CPIR) Management Model, Dr. Eric Shaw, 2015

AI can play an integral role at each stage to consolidate and analyze user information and behavioral indicators and inform an ideal organizational response to an insider risk. For example, AI can correlate numerous precursor and stressor risk indicators with behavioral data across different departments such as Human Resources (performance reviews, compensation and other publicly available employee information), IT (web visits and network access) and physical security systems (facilities access) to more efficiently expose a potential risk.
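The cross-department correlation described above can be illustrated with a minimal sketch. It assumes each indicator arrives as a `(user, source, indicator)` tuple; the source weights, thresholds, function names, and sample events are all hypothetical and chosen only for illustration, not taken from any particular product.

```python
from collections import defaultdict

# Hypothetical weights per data source; a real program would tune these
# together with HR, legal, and security stakeholders.
WEIGHTS = {"hr": 1.0, "it": 2.0, "physical": 1.5}


def correlate(events):
    """Group (user, source, indicator) events by user and sum source weights."""
    profiles = defaultdict(lambda: {"score": 0.0, "sources": set(), "indicators": []})
    for user, source, indicator in events:
        profile = profiles[user]
        profile["score"] += WEIGHTS.get(source, 0.0)
        profile["sources"].add(source)
        profile["indicators"].append(indicator)
    return dict(profiles)


def needs_review(profile, min_sources=2, min_score=3.0):
    """Surface a user only when indicators from several departments line up."""
    return len(profile["sources"]) >= min_sources and profile["score"] >= min_score


# Illustrative sample events (made up for this sketch).
events = [
    ("alice", "hr", "poor_performance_review"),
    ("alice", "it", "unusual_network_access"),
    ("alice", "physical", "after_hours_badge_in"),
    ("bob", "it", "blocked_site_visit"),
]
profiles = correlate(events)
flagged = sorted(user for user, p in profiles.items() if needs_review(p))
print(flagged)
```

Requiring indicators from more than one source before flagging is one way to keep a single isolated event from triggering a review; the output is a queue for a human analyst, not a verdict.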

Organizations implementing AI for insider risk management must ensure that monitoring practices are transparent, legally justified, and respectful of employee privacy. This includes providing appropriate notice, maintaining human oversight, and complying with data protection and employment laws such as GDPR, CPRA, and ECPA.

But AI’s benefits can go much deeper and be more revealing. AI makes possible linguistic analysis that inspects subjective information contained in employee emails, chat logs, and other communications for troubling content that indicates dissatisfaction or potentially threatening behavior. This could expose a high level of employee disgruntlement, which could manifest as an increased risk to an organization’s secure information. It could also reveal an intention by one employee to harm another. Such analysis and early detection enable organizations to address employee wellness early, with the opportunity to intervene and potentially disrupt those who may be on a critical pathway to insider risk.
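As a deliberately simplified stand-in for the linguistic analysis described above, the sketch below scores messages against a small weighted word list. Production tools would more likely rely on trained language models; the lexicon, weights, threshold, and sample messages here are hypothetical.

```python
import re

# Hypothetical lexicon of concerning terms and their weights.
LEXICON = {"unfair": 1, "hate": 2, "quit": 1, "revenge": 3, "payback": 3}


def concern_score(message):
    """Sum the lexicon weights of the words found in a message."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(LEXICON.get(word, 0) for word in words)


def flag_for_review(messages, threshold=3):
    """Return messages whose score meets the threshold, for a human to read."""
    return [m for m in messages if concern_score(m) >= threshold]


# Illustrative sample messages (made up for this sketch).
messages = [
    "Lunch at noon?",
    "This review was unfair and I hate this team.",
    "They will get payback for this.",
]
flagged = flag_for_review(messages)
print(flagged)
```

A word list like this cannot read context or sarcasm, which is one reason flagged messages should go to a reviewer rather than trigger action automatically.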

AI enables deep data analytics of vast quantities of risk indicators and customization of metrics by an organization to efficiently and effectively identify anomalous behavior associated with malicious and non-malicious intentions from insiders. Having the ability to ingest user activity monitoring data spanning applications, file movement, keywords, devices, network access, web browsers, email, etc., is critical for compiling early warning indicators. AI combined with user attributable data is a proven technique for organizations to quickly assess potential threats and make proactive and informed decisions.
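One simple way to picture anomaly detection on user activity data is a z-score check against a user's own baseline. The sketch below assumes a daily count of file transfers per user; the function name, threshold, and sample numbers are hypothetical, and real tools typically combine many such signals with more robust models.

```python
from statistics import mean, stdev


def is_anomalous(history, today, z_threshold=3.0):
    """Compare today's count with the user's own history using a z-score."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma >= z_threshold


# Illustrative baseline: daily file transfers for one user over a week.
baseline = [10, 12, 9, 11, 10, 13, 11]
print(is_anomalous(baseline, 80))
print(is_anomalous(baseline, 12))
```

Measuring each user against their own history, rather than a global average, helps avoid flagging roles that legitimately move large volumes of data.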

Important Considerations for AI-Enabled Insider Risk Data

While AI can vastly improve an organization’s insider risk management program, there are some important governance considerations to keep in mind. First, AI-enabled insider risk tools draw upon huge amounts of data to function most accurately, and that data is, by its nature, employee-centric. That means all insider risk monitoring that leverages AI should respect the personal privacy and civil liberties of employees. Insider risk management teams need to ensure that proper safeguards are always in place so that AI-enabled tools only collect appropriate, insightful, and relevant user data that is necessary for the organization’s information security and workforce protection.

With the emergence of AI-enabled tools that make analysts more effective in their daily responsibilities (making it easier for them to do more with less), it is important to remember that not all risky employee behavior is malicious. Malicious action is intentional and often driven by a desire for personal gain or vengeance, whether the insider works alone or collaborates with outside fraudsters to steal from or sabotage organizational systems or fellow employees. Negligence is usually accidental, resulting from convenience or incompetence on the part of an employee who may be trying to follow policy but lacks the training to properly perform a function. Using technology responsibly, with a human in the loop, is necessary to determine whether an employee is truly malicious or simply negligent, because these are very different situations that require different organizational responses.

When utilized properly, AI enhances both insider risk data collection and insider threat analysis. Such insider risk programs don’t just give analysts insight into what happened but also why it may have happened. By understanding the root cause (new security process, HR policy, etc.), an insider risk program can provide real business value to the organization by reducing the mean time to investigate and remediate an employee or organizational behavior. Insider risk management should be considered a key security and business contributor to the overall success of the organization.
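Mean time to investigate can be tracked with very little tooling. The sketch below assumes each case is recorded as an `(opened, closed)` pair of timestamps; the function name and the sample cases are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mean_time_to_investigate(cases):
    """Average hours between a case being opened and closed."""
    hours = [(closed - opened).total_seconds() / 3600 for opened, closed in cases]
    return mean(hours)


# Illustrative cases (made up for this sketch).
cases = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 17)),  # 8 hours
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9)),   # 24 hours
]
mtti = mean_time_to_investigate(cases)
print(mtti)
```

Comparing this figure before and after a tooling or process change is one way to show whether the program is delivering the business value described above.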

Conclusion

The evolving workplace and the continually advancing sophistication of external threat actors make it more and more difficult for organizations to defend against insider risks. Bad actors don’t always look like bad actors. Sometimes, the good actors look like bad actors, and sometimes, identity obfuscation is by design. That is why smart teams are leveraging AI-enabled techniques with essential collection tools to gain insight and explore information to successfully manage and avert internal risks.

Securing the trustworthiness of your workforce should always be the goal of any insider risk management program. Mature, AI-assisted insider risk programs do much more than that. They exist to enable organizations to become more efficient, streamline processes, and provide a mechanism for greater collaboration. Often, that means not finding the “bad actor,” but instead proving that one doesn’t exist.