Abstract

In digital advertising, brand safety depends not only on the ad but also on where it appears. Billions of placements are decided every day, each in a matter of milliseconds, and even the right message can cause harm if shown in the wrong context. In this article, Paras Choudhary shares how his team built an AI system that evaluates both ad creatives and their surrounding content in real time, combining automation with human judgment to make brand-safe decisions at scale.

You’ve spent months building a campaign fine-tuned to reflect your brand perfectly, until it lands in the wrong place. Suddenly, everything you planned feels misaligned, and it happens faster than anyone can react.

This happens more often than teams expect. In digital advertising, placements happen in milliseconds. Creatives are delivered across apps, videos, and websites with little control over the surrounding context.

When a publisher requests an ad, it sends limited metadata about the content. The ad server must respond instantly, selecting a creative that fits both the brand and the environment. That decision depends on smart inference and strong safeguards.

To solve this, our team built a system that evaluates both the ad and its placement context in real time. This article shares how it works and what it has delivered so far.

The Problem: More Content, Less Time


Our platform handles millions of ad creatives each day, including videos, banners, and dynamic assets. At the same time, billions of ad requests come in as new content is constantly added across apps, websites, and smart TVs. Each interaction creates a fresh ad opportunity, with its own policies, audiences, and brand safety considerations.

Manual review isn’t feasible at this scale. Even compliant creatives may need changes based on region, language, or context. For video placements, it’s important to analyze what comes before and after the ad break to avoid unsafe or jarring transitions. Competitive conflicts also matter: rival brands like Pepsi and Coke should never appear in the same sequence.

The scale of this problem keeps growing. To get a clearer view of why faster, smarter systems are necessary, here’s a snapshot of recent enforcement activity from a major digital advertising platform:

Google Ads Enforcement Overview (2022–2023)

Source: Google Ads Safety Report 2022 and Google Ads Safety Report 2023

| Metric | 2022 | 2023 | Change |
|---|---|---|---|
| Ads removed | 5.2 billion | 5.5 billion | +300 million |
| Advertiser accounts suspended | 6.7 million | 12.7 million | Nearly doubled |
| Publisher pages restricted or blocked | ~2 billion | 2.1 billion | Slight increase |
| Publisher websites with site-level action | ~280,000 | 395,000+ | Significant increase |
| Share of publisher page-level enforcement driven by AI/ML models | Not disclosed | Over 90% | n/a |

These figures show how much content still requires review, even with safeguards in place. They also show that automated systems now carry most of the load: in 2023, over 90% of publisher page-level enforcement was driven by machine learning.

Still, issues slip through. In 2023, even major advertisers like Procter & Gamble and Microsoft had ads placed alongside unsafe content, despite using industry-standard verification providers.

NewsGuard also identified over 140 brands that inadvertently served ads on low-quality, AI-generated websites, many created using tools like ChatGPT. These sites often produce unreliable content and exploit programmatic ad systems, placing brand messages in contexts no one intended or approved.

With this environment in mind, we needed to build a system that could evaluate creative content quickly, adapt to the context, and make the right call before the ad is ever shown.

How the System Works

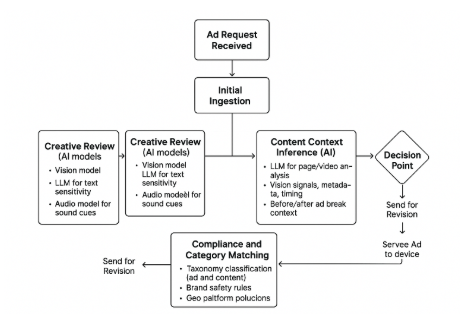

Figure 1. Ad Review and Delivery Flow with AI-Powered Creative and Context Decisioning

The system reviews ad creatives and the surrounding content simultaneously, making policy decisions in just a few milliseconds. Every ad opportunity must be evaluated not only for the safety and compliance of the creative itself, but also for its alignment with the content it will appear alongside. The following flow reflects how that happens across billions of ad requests daily.

1. Ad Request Received

When a publisher’s content reaches an ad slot, the publisher sends a request to the platform. The request includes basic metadata such as page URL, device type, content category, and time of day.

However, this metadata is often limited. It may not reflect the full meaning, tone, or sequence of the content. For example, in a video, it does not describe what appears just before or after the ad break. The system must infer much of this context on its own.
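To make the gap concrete, here is a minimal sketch of an incoming request and of the signals still missing from it. The `AdRequest` fields and the `missing_context` helper are hypothetical illustrations, not the platform’s actual schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdRequest:
    """Hypothetical shape of the metadata a publisher sends with an ad request."""
    page_url: str
    device_type: str                 # e.g. "ctv", "mobile", "desktop"
    ad_format: str                   # e.g. "video", "display"
    content_category: Optional[str]  # publisher-declared; may be missing
    local_hour: int                  # viewer's local time of day, 0-23
    # Signals publishers rarely send, which the system has to infer itself.
    inferred_tone: Optional[str] = None
    pre_break_summary: Optional[str] = None
    post_break_summary: Optional[str] = None


def missing_context(req: AdRequest) -> list[str]:
    """Return the context signals that still have to be inferred for this request."""
    gaps = []
    if req.content_category is None:
        gaps.append("content_category")
    if req.inferred_tone is None:
        gaps.append("tone")
    # For video, the segments around the ad break matter, not just the page.
    if req.ad_format == "video" and (
        req.pre_break_summary is None or req.post_break_summary is None
    ):
        gaps.append("adjacent_video_content")
    return gaps


req = AdRequest(
    page_url="https://example.com/show/episode-1",
    device_type="ctv",
    ad_format="video",
    content_category="entertainment",
    local_hour=19,
)
print(missing_context(req))  # ['tone', 'adjacent_video_content']
```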

2. Initial Ingestion

The system receives the creative and ad request together. It extracts asset components including text, images, audio, and video.

Metadata is parsed and compared against past violations or known risk factors. The system then assigns a processing path based on factors like geography, format, advertiser profile, and regulatory requirements. Creatives that meet basic safety standards may be fast-tracked. Others move into full evaluation.
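The routing step can be pictured as a small rule function. This is a sketch under assumed rules: the `assign_path` name, the two path labels, and the specific conditions (prior violations, strict regions, format, a trusted-advertiser list) are illustrative, chosen to mirror the factors named above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngestedCreative:
    advertiser_id: str
    geo: str          # target region code
    ad_format: str    # "banner", "video", or "dynamic"
    has_audio: bool


def assign_path(
    creative: IngestedCreative,
    prior_violations: dict[str, int],
    strict_geos: set[str],
    trusted_advertisers: set[str],
) -> str:
    """Return "fast_track" or "full_review". Every rule here is an illustrative assumption."""
    if prior_violations.get(creative.advertiser_id, 0) > 0:
        return "full_review"   # a history of violations always gets the full pipeline
    if creative.geo in strict_geos:
        return "full_review"   # heavily regulated regions run every check
    if creative.ad_format != "banner" or creative.has_audio:
        return "full_review"   # more modalities means more surface to inspect
    if creative.advertiser_id in trusted_advertisers:
        return "fast_track"    # simple, low-risk asset from a clean account
    return "full_review"


banner = IngestedCreative("adv-1", "REGION_A", "banner", has_audio=False)
print(assign_path(banner, prior_violations={}, strict_geos={"REGION_B"}, trusted_advertisers={"adv-1"}))
# fast_track
```

Defaulting to `full_review` means any unanticipated combination takes the safer, slower path.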

3. Creative and Content Analysis

Two processes run in parallel.

In the creative review, AI models evaluate the ad using different modalities:

- Vision models scan for risky visual cues such as nudity or weapons

- Language models assess textual and spoken content for hate speech, political references, or misleading claims

- Audio models detect inappropriate sounds, tone, or profanity

At the same time, the system analyzes the publisher’s content. It uses natural language processing, image signals, and metadata cues to infer the tone, sentiment, and subject matter of the content. In video placements, it checks the content immediately before and after the ad break. This allows the system to recognize when a creative might be technically safe, but still contextually inappropriate.
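A minimal sketch of the two parallel tracks follows. The three model functions are hypothetical placeholders that read pre-computed tags instead of running real vision, language, or audio models, and `SENSITIVE_TONES` is an assumed example list. The point is the shape: per-modality risk scores, a context read, and a final check that catches creatives that are safe on their own but wrong for the placement.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the vision, language, and audio models. Each returns
# label -> risk score in [0, 1]; real models would replace these tag lookups.
def vision_model(creative: dict) -> dict[str, float]:
    tags = set(creative.get("visual_tags", []))
    return {"nudity": float("nudity" in tags), "weapons": float("weapon" in tags)}


def language_model(creative: dict) -> dict[str, float]:
    tags = set(creative.get("text_tags", []))
    return {label: float(label in tags) for label in ("hate_speech", "political", "misleading_claim")}


def audio_model(creative: dict) -> dict[str, float]:
    tags = set(creative.get("audio_tags", []))
    return {"profanity": float("profanity" in tags)}


def review_creative(creative: dict) -> dict[str, float]:
    scores: dict[str, float] = {}
    for model in (vision_model, language_model, audio_model):
        scores.update(model(creative))
    return scores


def analyze_context(content: dict) -> dict[str, str]:
    # Placeholder for NLP, image, and metadata inference over the publisher content,
    # including the video segments just before and after the ad break.
    return {"tone": content.get("tone", "neutral"), "subject": content.get("category", "unknown")}


SENSITIVE_TONES = {"tragic", "violent"}  # assumption: tones many brands avoid


def evaluate(creative: dict, content: dict, threshold: float = 0.5) -> dict[str, bool]:
    # Run creative review and context analysis side by side.
    with ThreadPoolExecutor(max_workers=2) as pool:
        creative_job = pool.submit(review_creative, creative)
        context_job = pool.submit(analyze_context, content)
        scores, context = creative_job.result(), context_job.result()
    creative_safe = all(score < threshold for score in scores.values())
    return {
        "creative_safe": creative_safe,
        # Safe on its own, but wrong for this placement.
        "contextually_inappropriate": creative_safe and context["tone"] in SENSITIVE_TONES,
    }


print(evaluate({"visual_tags": ["beach"]}, {"tone": "tragic", "category": "news"}))
# {'creative_safe': True, 'contextually_inappropriate': True}
```

Threads suit this shape when each model call is a network request to a serving system; for in-process, CPU-bound models a process pool or separate services would be the more natural choice.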

4. Compliance and Category Matching

Both the ad and the content are categorized using a shared taxonomy. The system applies multiple checks at this stage:

- Competitive separation, to ensure rival brands do not appear in the same session

- Regional and cultural rules, such as avoiding adult content during children’s viewing hours

- Advertiser-specific settings, such as restrictions on alcohol or political messaging

- Platform-level policy enforcement based on format, geography, or device

This ensures that the final match between creative and content is appropriate on all fronts.
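The four checks can be sketched as set intersections over shared-taxonomy labels. Everything in the policy tables below (`COMPETITORS`, `CHILDREN_HOURS`, `PLATFORM_BLOCKED`, the region and brand names) is a hypothetical example, and advertiser settings are modeled here as content categories the advertiser chooses to avoid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Creative:
    brand: str
    categories: frozenset[str]          # shared-taxonomy labels for the ad


@dataclass(frozen=True)
class Placement:
    region: str
    device: str
    local_hour: int
    content_categories: frozenset[str]  # shared-taxonomy labels for the content
    brands_in_session: frozenset[str]   # brands already served in this session


# All policy tables below are hypothetical examples, not real platform rules.
COMPETITORS = {"BrandA": {"BrandB"}, "BrandB": {"BrandA"}}
CHILDREN_HOURS = {"REGION_X": range(6, 21)}   # local hours 06:00 to 20:59
PLATFORM_BLOCKED = {"ctv": {"gambling"}}      # per-device platform policy


def compliance_failures(c: Creative, p: Placement, advertiser_excludes: frozenset[str]) -> list[str]:
    failures = []
    if COMPETITORS.get(c.brand, set()) & p.brands_in_session:
        failures.append("competitive_separation")
    hours = CHILDREN_HOURS.get(p.region)
    if hours is not None and p.local_hour in hours and "adult" in c.categories:
        failures.append("regional_daypart")
    if advertiser_excludes & p.content_categories:
        failures.append("advertiser_settings")
    if PLATFORM_BLOCKED.get(p.device, set()) & c.categories:
        failures.append("platform_policy")
    return failures


ad = Creative("BrandA", frozenset({"beverage"}))
slot = Placement("REGION_X", "ctv", 19, frozenset({"politics"}), frozenset({"BrandB"}))
print(compliance_failures(ad, slot, advertiser_excludes=frozenset({"politics"})))
# ['competitive_separation', 'advertiser_settings']
```

Because both sides use the same taxonomy, every check reduces to comparing label sets, which keeps this stage cheap enough for the request path.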

5. Decision Point

Based on all analyses, the system makes one of three decisions:

- Approve the creative for delivery

- Modify the creative by swapping frames or applying alternate assets

- Return the creative to the advertiser or creative provider for revision, with a clear reason for the block
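The three outcomes map naturally onto an enum. In this sketch, `fixable` is an assumed input listing the failure types that a pre-approved alternate asset can resolve; anything outside it sends the creative back with the blocking reasons attached.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    APPROVE = "approve"
    MODIFY = "modify"   # swap frames or apply alternate assets
    RETURN = "return"   # send back to the advertiser with a reason


@dataclass
class Decision:
    action: Action
    reason: Optional[str] = None


def decide(failures: list[str], fixable: set[str]) -> Decision:
    """fixable: failure types a pre-approved alternate asset can resolve (an assumption)."""
    if not failures:
        return Decision(Action.APPROVE)
    if all(f in fixable for f in failures):
        return Decision(Action.MODIFY, reason="auto-fix: " + ", ".join(failures))
    blocking = [f for f in failures if f not in fixable]
    return Decision(Action.RETURN, reason="blocked by: " + ", ".join(blocking))


print(decide(["regional_daypart", "misleading_claim"], fixable={"regional_daypart"}))
# Decision(action=<Action.RETURN: 'return'>, reason='blocked by: misleading_claim')
```

Listing only the non-fixable failures in the returned reason keeps the advertiser-facing message focused on what they actually need to change.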

6. Ad Delivery

If approved, the creative is passed to the exchange or bidding layer. One bidder wins the slot. The selected ad is served to the user’s device in the context that has been verified as safe, compliant, and brand-aligned.

Each decision is logged for transparency, reporting, and future model improvement. This ensures that safety, relevance, and speed are maintained even under high-scale and fragmented content environments.
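One way to make each decision reusable for reporting and retraining is a structured, one-record-per-line log. The field names below are hypothetical; the useful idea is recording the reasons alongside the model versions that produced them.

```python
import json
from datetime import datetime, timezone


def decision_log_line(
    request_id: str,
    creative_id: str,
    action: str,
    reasons: list[str],
    model_versions: dict[str, str],
    latency_ms: float,
) -> str:
    """Serialize one decision as a JSON line (field names are hypothetical)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "request_id": request_id,
        "creative_id": creative_id,
        "action": action,
        "reasons": reasons,                # what advertiser-facing reports explain
        "model_versions": model_versions,  # ties each outcome to the models that made it
        "latency_ms": round(latency_ms, 2),
    }
    return json.dumps(record, sort_keys=True)


line = decision_log_line("req-42", "cr-7", "modify", ["regional_daypart"], {"vision": "v3"}, 3.5)
print(json.loads(line)["action"])  # modify
```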

Human Review

Edge cases, such as satire, cultural nuance, or borderline visuals, are handled by trained reviewers with escalation paths.

As Alex Popken, VP at WebPurify and former Twitter Trust & Safety executive, said:

We’ve built our system with that balance in mind: automated where possible, human where it matters.
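A common way to express "automated where possible, human where it matters" is a confidence band. The sketch below assumes the model emits a violation probability and that certain edge-case tags are available. The thresholds and tag names are illustrative, not the production values.

```python
# Hypothetical tags for content that models tend to misjudge.
EDGE_CASE_TAGS = frozenset({"satire", "cultural_reference", "borderline_visual"})


def route(
    violation_score: float,
    tags: frozenset[str] = frozenset(),
    low: float = 0.2,
    high: float = 0.9,
) -> str:
    """violation_score: model probability of a policy violation, in [0, 1]."""
    if tags & EDGE_CASE_TAGS:
        return "human_review"   # nuance that models handle poorly goes to people
    if violation_score >= high:
        return "auto_block"
    if violation_score <= low:
        return "auto_approve"
    return "human_review"       # uncertain middle band


print(route(0.05), route(0.95), route(0.5), route(0.05, frozenset({"satire"})))
# auto_approve auto_block human_review human_review
```

Widening or narrowing the band between `low` and `high` is the lever that trades reviewer workload against the risk of automated mistakes.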

Understanding Publisher Context for Ad Decisioning

Brand safety depends on knowing where an ad will appear. Publishers send metadata such as URL, category, and language through the API, but this information is often incomplete. Our system fills in the gaps using LLMs and vision models to infer tone, visuals, and semantic context.
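Filling the gaps can be as simple as letting declared values stand and using inferred ones only where the publisher sent nothing, while recording where each value came from. In this sketch, `fill_context` is a hypothetical helper and `fake_infer` stands in for the LLM and vision models.

```python
from typing import Callable, Optional


def fill_context(
    declared: dict[str, Optional[str]],
    infer: Callable[[str], dict[str, str]],
) -> dict[str, tuple[str, str]]:
    """Merge publisher-declared metadata with model-inferred signals.

    Declared values win; inferred values only fill fields the publisher left empty.
    Each field keeps its source so later stages know which values were inferred.
    """
    inferred = infer(declared["url"])
    merged: dict[str, tuple[str, str]] = {}
    for key in declared.keys() | inferred.keys():
        value = declared.get(key)
        if value is not None:
            merged[key] = (value, "publisher")
        elif key in inferred:
            merged[key] = (inferred[key], "inferred")
    return merged


def fake_infer(url: str) -> dict[str, str]:
    # Hypothetical stand-in for the LLM and vision models that read the page.
    return {"category": "news", "tone": "somber", "language": "en"}


ctx = fill_context({"url": "https://example.com/story", "category": None, "language": "es"}, fake_infer)
print(ctx["category"], ctx["language"], ctx["tone"])
# ('news', 'inferred') ('es', 'publisher') ('somber', 'inferred')
```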

The content is assigned a unique ID and mapped to a standardized taxonomy used for ad matching. The ad server then selects the most suitable creative from multiple bidders, based on bid value, safety alignment, and content fit.

This ensures ads are placed quickly and in the right environment.
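The two steps above, a stable content ID and a selection that weighs bid value, safety, and fit, might look like the following sketch. The URL normalization, the `Bid` fields, the hard safety gate at `min_safety`, and the multiplicative fit bonus are all assumptions made for illustration; the article does not specify the scoring formula.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def content_id(url: str) -> str:
    """Stable ID for a piece of content: hash of a lightly normalized URL (an assumption)."""
    parts = urlsplit(url.strip())
    normalized = urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path.rstrip("/") or "/", parts.query, "")
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:16]


@dataclass(frozen=True)
class Bid:
    creative_id: str
    bid_cpm: float
    safety_score: float          # 0 to 1, from creative review
    categories: frozenset[str]   # shared-taxonomy labels of the creative


def fit_score(creative_cats: frozenset[str], content_cats: frozenset[str]) -> float:
    """Share of the creative's taxonomy labels that also describe the content."""
    if not creative_cats:
        return 0.0
    return len(creative_cats & content_cats) / len(creative_cats)


def select_creative(bids: list[Bid], content_cats: frozenset[str],
                    min_safety: float = 0.9, fit_weight: float = 0.5) -> Optional[Bid]:
    # Safety is a hard gate; bid value and content fit are traded off above it.
    eligible = [b for b in bids if b.safety_score >= min_safety]
    if not eligible:
        return None
    return max(eligible, key=lambda b: b.bid_cpm * (1 + fit_weight * fit_score(b.categories, content_cats)))


content = frozenset({"sports", "outdoor"})
bids = [
    Bid("a", 5.0, 0.95, frozenset({"finance"})),
    Bid("b", 4.0, 0.97, frozenset({"sports"})),
    Bid("c", 9.0, 0.50, frozenset({"sports"})),
]
print(select_creative(bids, content).creative_id)  # b
```

Treating safety as a gate rather than a weight means no bid amount can buy its way past a safety shortfall; only eligible creatives compete on price and fit.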

A Typical Scenario

If a brand submits a video ad with a frame showing a model applying lotion near the neckline, the vision model may flag that frame as borderline. Although the creative classifier finds no outright policy violation, the context model detects that the placement falls during family viewing hours in a region with stricter norms.

Rather than block the ad, the system recommends a pre-approved frame swap. The new version is re-checked instantly, and if cleared, proceeds to delivery. The entire cycle happens in under a second, with no impact on the campaign timeline.
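The swap-and-recheck loop in this scenario can be sketched as below. `try_frame_swaps` and the frame names are hypothetical, and the one-second `budget_s` simply mirrors the "under a second" figure above rather than a documented setting.

```python
import time
from typing import Callable, Optional, Sequence


def try_frame_swaps(
    frames: list[str],
    flagged_index: int,
    alternates: Sequence[str],
    passes_check: Callable[[list[str]], bool],
    budget_s: float = 1.0,
) -> Optional[list[str]]:
    """Swap the flagged frame for pre-approved alternates until one passes re-check.

    Returns the modified frame list, or None if nothing passes within the time budget
    (in which case the creative would be returned to the advertiser instead).
    """
    start = time.monotonic()
    for alt in alternates:
        if time.monotonic() - start > budget_s:
            break
        candidate = list(frames)
        candidate[flagged_index] = alt
        if passes_check(candidate):   # re-run the checks on the new version
            return candidate
    return None


def family_hours_check(frames: list[str]) -> bool:
    return "lotion_neckline" not in frames   # hypothetical re-check for this placement


frames = ["intro", "lotion_neckline", "logo"]
print(try_frame_swaps(frames, 1, ["lotion_hands", "product_only"], family_hours_check))
# ['intro', 'lotion_hands', 'logo']
```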

Keeping Up with Global Compliance


We added a compliance layer that adjusts reviews by geography, platform, and advertiser settings, ensuring the right rules apply in each context. Dashboards show advertisers what was flagged, why it happened, and what action was taken, making the process more transparent and helping teams improve future submissions.
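One simple way to layer these rules, and to explain them afterward, is to let each layer only add restrictions and to remember which layer blocked each category. The layer names and the `blocking_layers`/`explain_flags` helpers below are assumptions for illustration.

```python
def blocking_layers(
    platform: set[str],
    regional: dict[str, set[str]],
    advertiser: set[str],
    geo: str,
) -> dict[str, str]:
    """Map each blocked category to the first layer that blocks it.

    Layers only add restrictions (a hypothetical design): a category blocked anywhere is blocked.
    """
    sources: dict[str, str] = {}
    layers = [("platform", platform), (f"region:{geo}", regional.get(geo, set())), ("advertiser", advertiser)]
    for layer_name, categories in layers:
        for category in sorted(categories):
            sources.setdefault(category, layer_name)
    return sources


def explain_flags(creative_categories: set[str], sources: dict[str, str]) -> list[str]:
    """Readable reasons, of the kind an advertiser dashboard could show."""
    return [f"{cat}: blocked by {sources[cat]} rules" for cat in sorted(creative_categories) if cat in sources]


rules = blocking_layers(
    platform={"weapons"},
    regional={"REGION_X": {"alcohol"}},
    advertiser={"politics"},
    geo="REGION_X",
)
print(explain_flags({"alcohol", "sports"}, rules))
# ['alcohol: blocked by region:REGION_X rules']
```

Keeping the source layer next to each blocked category is what lets a dashboard say not only what was flagged but why.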

The wider industry is moving in the same direction. Helen Mussard, Chief Marketing Officer at IAB Europe, noted, “The poll results highlight how seriously the digital advertising industry takes the safety of brand advertising investments and how improvements have been made in tackling this over the past couple of years.” We’ve seen the same shift. Brand safety is no longer a background task. It is now a shared priority across platforms, partners, and advertisers.

What We’ve Seen So Far

Since launch, the system has delivered clear improvements:

- Fewer policy violations and faster creative approvals

- Reduced user complaints about ad placement

- Better guidance for advertisers on building compliant creatives

It’s also improved how content is interpreted and aligned:

- Accurate categorization at scale, across formats and regions

- Context-aware classification that captures nuance

- Fuzzy matching with ~95% accuracy for near-duplicate content

- Cleaner ad-to-environment alignment, with less need for manual review
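Near-duplicate matching is often built on set similarity. The sketch below uses word shingles and Jaccard similarity. This is a standard technique, not necessarily the one behind the ~95% figure, and the `0.8` threshold is an untuned assumption.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Overlapping k-word windows of lowercased, punctuation-stripped text."""
    tokens = re.findall(r"\w+", text.lower())
    if len(tokens) < k:
        return {tuple(tokens)} if tokens else set()
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard(a: set, b: set) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(x: str, y: str, threshold: float = 0.8) -> bool:
    return jaccard(shingles(x), shingles(y)) >= threshold


print(is_near_duplicate("Buy one, get one FREE today only", "buy one get one free today only!"))
# True
```

Comparing every pair this way does not scale to millions of items; MinHash with locality-sensitive hashing is the usual way to approximate Jaccard similarity so that only likely matches are compared.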

Brand safety is not just about blocking risk, but about delivering the right content in the right context. Our system combines speed, precision, and adaptability to make decisions in milliseconds, grounded in real-world context.

As the digital landscape evolves, this foundation allows us to stay ahead, protecting brands, respecting audiences, and maintaining trust at scale.

References

- Google (2023). Our 2022 Ads Safety Report. blog.google. https://blog.google/products/ads-commerce/our-2022-ads-safety-report/

- Google (2024). Google Ads Safety Report 2023. blog.google. https://blog.google/products/ads-commerce/google-ads-safety-report-2023/

- 2045 (2024). Brand Safety Under Fire: Insights from the Adalytics 2024 Study. 2045.co. https://www.2045.co/post/brand-safety-under-fire-insights-from-the-adalytics-2024-study

- Fanatical Futurist (2023). Junk websites filled with AI-generated content are making millions from programmatic ads. fanaticalfuturist.com. https://www.fanaticalfuturist.com/2023/07/junk-websites-filled-with-ai-generated-content-are-making-millions-from-programmatic-ads/

- Associated Press (2023). Alex Popken on AI, content moderation, and human oversight. apnews.com. https://apnews.com/article/alex-popken-webpurify-twitter-ai-content-moderation-28e540b1021d6bb7ecd5fe7584db2976

- ExchangeWire (2023). IAB Europe announces results of its 2023 Brand Safety Poll. exchangewire.com. https://www.exchangewire.com/blog/2023/03/15/iab-europe-announces-results-of-its-2023-brand-safety-poll/

About the Author

Paras Choudhary is a senior manager of technical program management with experience building large-scale software systems across advertising and consumer technology. He currently leads programs focused on AI-driven brand safety and ad content review at a major U.S. tech company. Paras holds an integrated Master’s degree in Electrical Engineering from IIT Bombay and has led initiatives across pricing automation, marketing tools, and platform compliance.