Artificial intelligence now sits in many daily workflows. Employee views on it become clear when leaders take the time to listen. Many want help with routine work, clear guardrails, and fair credit for results. They also want to know who sees their data, how decisions get made, and which skills they should build next. Platforms like Thrivea help teams gather that feedback and turn it into action.

What employees value in AI

People value getting time back for focused work. They prefer tools that draft, sort, and summarise. They want support for research, scheduling, and basic reporting. They expect human review at key points. They ask for clear labels when AI writes or recommends something. They also ask for easy ways to correct outputs.

Employees want AI that fits into the apps they already use. They want steps that feel simple and safe. They look for a clear owner for each process. They want the right to raise flags when results look off. They want training that uses their own tasks, not generic demos.

Where worries come from

Concerns rise when AI feels hidden. People do not like unclear monitoring. They do not like models trained on their private work without consent. They reject vague language on data retention. They do not want leaders to place blind trust in automated ratings or hiring screens. They worry when there is no clear path to challenge and correct errors.

People in some roles fear deskilling. Others fear more work, not less. Support teams may fear response scripts that erase their voice. Creative teams may fear output that looks the same each time. These signals point to one need: trust built through clarity and choice.

How teams build trust

Trust grows when leaders explain purpose, scope, and limits. Start with a use case that solves a real pain point. Show the current process and the target process. State what data the tool will use and why. Map who can see the outputs. Set human checkpoints. Write down how to roll back a change.

Involve employees in design sessions. Ask them to note edge cases. Invite them to write the first drafts of prompts and guidelines. Form a small review group to test risky steps. Publish a plain-language policy. Update it on a set schedule. Give people a way to opt out in high-risk areas when possible.

Skills employees want

Workers ask for skills in prompt writing, error spotting, and source review. They want to learn basic model limits and common failure modes. They ask for judgment rules, such as when to escalate to a human. They want practice with real files, real tickets, and real briefs.

Managers need coaching on role design. They should know which tasks fit AI support and which tasks need a human lead. They should set goals by outcomes, not tool use. They should guide their teams on credit and authorship. When AI helps, give credit to the human who steered the work.

How leaders measure impact

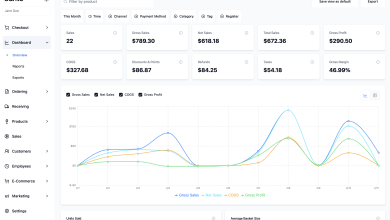

Leaders need a small set of clear metrics. Track time saved on known tasks. Track quality using peer review or customer ratings. Track error rates and fix times. Track employee sentiment by team and by role. Watch for shifts in after-hours work. Look at voluntary adoption, not adoption by mandate.

Use a baseline, then compare to a pilot group. Keep the evaluation window short. Share the results in simple charts. Explain what will change next. Close the loop with the teams that took part. When goals are not met, pause and improve the workflow.
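The baseline-versus-pilot comparison can be sketched in a few lines. This is a minimal illustration, not a prescribed method. It assumes each completed task is logged with the minutes it took and whether review found an error. The `TaskRecord` fields and the choice of median time are assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRecord:
    """One completed task. Field names are hypothetical; adapt them to your own tracking data."""
    minutes: float   # time spent on the task
    had_error: bool  # whether peer review found an error


def summarise(records: list[TaskRecord]) -> dict[str, float]:
    """Median task time and error rate for one group (baseline or pilot)."""
    if not records:
        raise ValueError("need at least one record")
    return {
        "median_minutes": median(r.minutes for r in records),
        "error_rate": sum(r.had_error for r in records) / len(records),
    }


def compare(baseline: list[TaskRecord], pilot: list[TaskRecord]) -> dict[str, float]:
    """Pilot vs baseline. A positive time_saved_pct means the pilot was faster;
    a positive error_rate_change means the pilot made more errors."""
    b, p = summarise(baseline), summarise(pilot)
    if b["median_minutes"] <= 0:
        raise ValueError("baseline median time must be positive")
    return {
        "time_saved_pct": 100 * (b["median_minutes"] - p["median_minutes"]) / b["median_minutes"],
        "error_rate_change": p["error_rate"] - b["error_rate"],
    }


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    baseline = [TaskRecord(20, False), TaskRecord(30, True), TaskRecord(25, False)]
    pilot = [TaskRecord(15, False), TaskRecord(20, False), TaskRecord(18, True)]
    print(compare(baseline, pilot))  # {'time_saved_pct': 28.0, 'error_rate_change': 0.0}
```

Reporting time saved next to the change in error rate keeps a faster but sloppier pilot from looking like a clear win. The median is used here because a few unusually long tasks would pull an average off course. That choice is a judgment call.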

Guardrails that keep people safe

Set rules for data handling that people can read on one page. Limit access based on role. Redact sensitive fields. Store prompts and outputs with timestamps. Log human approvals. Add watermarking to AI-generated text where it matters. Make an issue form easy to find.
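Several of these rules fit into one small record-keeping component: redaction, timestamped storage of prompts and outputs, logged approvals, and role-based access. The sketch below is a hypothetical illustration, not any product's API. The two regex patterns, the `CAN_VIEW_LOG` role set, and the in-memory `AuditLog` class are all assumptions. A real deployment would need a vetted redaction list and durable storage.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical redaction patterns; a real policy needs a reviewed, broader list.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

# Hypothetical roles allowed to read the log.
CAN_VIEW_LOG = {"reviewer", "admin"}


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def now_iso() -> str:
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditEntry:
    prompt: str
    output: str
    created_at: str
    approved_by: Optional[str] = None
    approved_at: Optional[str] = None


class AuditLog:
    """In-memory log: stores redacted prompts/outputs with timestamps and human approvals."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, prompt: str, output: str) -> int:
        """Redact and store one prompt/output pair; return its index."""
        self._entries.append(AuditEntry(redact(prompt), redact(output), now_iso()))
        return len(self._entries) - 1

    def approve(self, index: int, reviewer: str) -> None:
        """Mark an entry as approved by a named human reviewer, with a timestamp."""
        entry = self._entries[index]
        entry.approved_by = reviewer
        entry.approved_at = now_iso()

    def view(self, role: str) -> list[AuditEntry]:
        """Return a copy of the entry list, but only for roles allowed to see it."""
        if role not in CAN_VIEW_LOG:
            raise PermissionError(f"role {role!r} cannot view the audit log")
        return list(self._entries)
```

Redaction runs before anything is stored, so raw contact details never reach the log. Access is checked by role at read time.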

Write clear rules on performance use. Do not rate employees on AI output alone. Do not use shadow monitoring. If a tool observes work, say so in clear terms. Explain how the data will support growth, not punishment. Include unions or employee councils when relevant.

Practical steps for your workplace

Pick one workflow with a clear pain point, such as support email triage or meeting notes. Map the steps with the team that owns the work. Define the input, the output, and the handoff. Choose a tool that slots into the current stack. Run a small pilot with a fixed end date. Train with the team’s own examples. Provide a single contact for help.

After the pilot, hold a debrief. Capture wins, misses, and edge cases. Decide what to keep, change, or stop. Publish the decision and the next steps. Expand to a second use case only when the first one holds steady.

Closing thoughts

Employees see AI as a tool that can remove friction from daily work. They want clarity on data, credit, and oversight. They ask leaders to involve them early and treat the rollout as shared work. When leaders listen and set clear rules, trust grows and results improve.