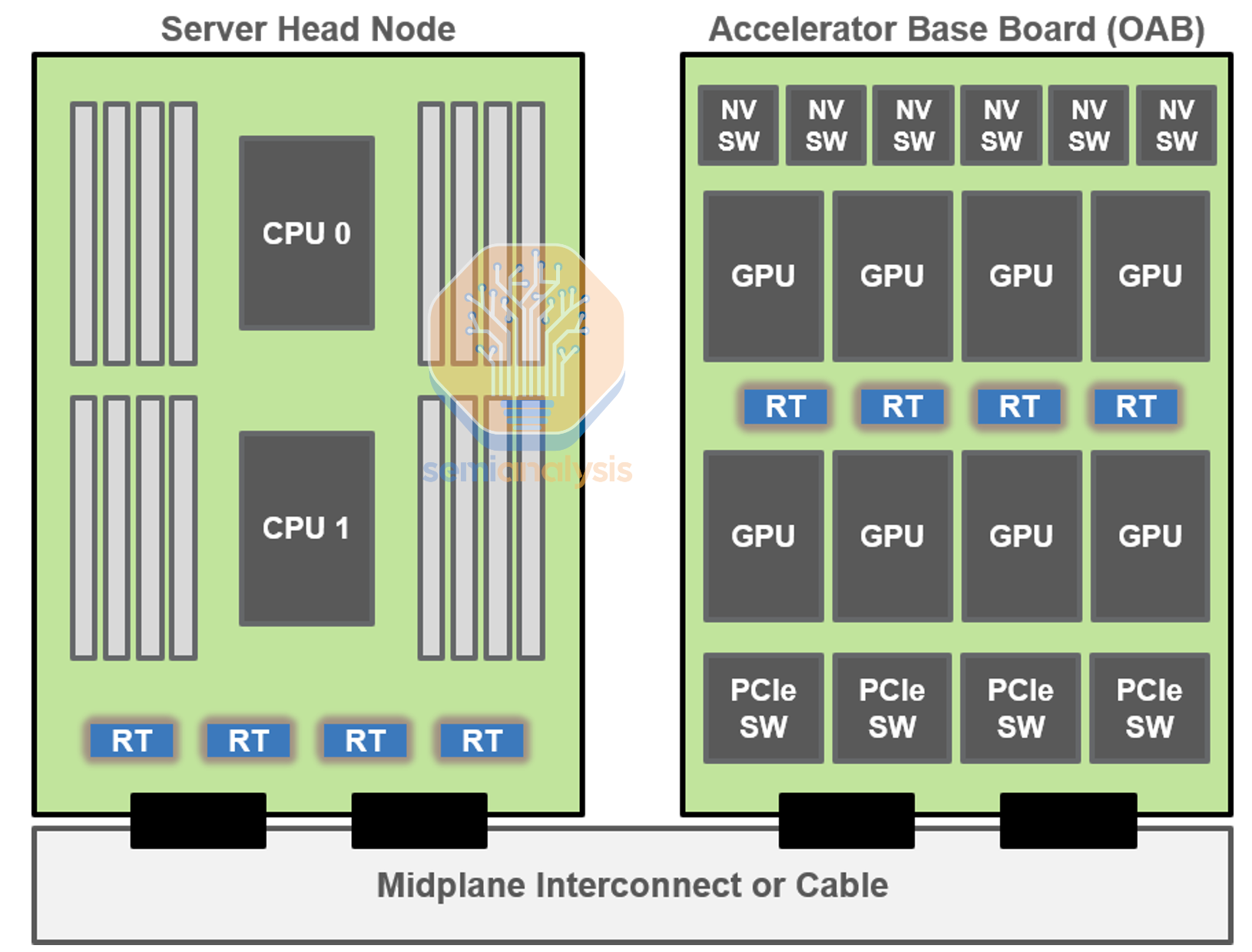

In the relentless race for AI supremacy, the GPU's insatiable hunger for power has become a defining challenge. As AI models grow larger and more complex, so does the energy required to train and run them. But while the spotlight stays fixed on high-wattage GPUs, a quieter energy drain is often overlooked: the head node. This critical but frequently over-provisioned component, which manages the entire AI system, can waste significant power while the GPUs are idle. A new paradigm is emerging, however: the low-power, intelligent head node, which promises to substantially improve the energy efficiency of modern AI platforms.

The Problem with the Old Brain

For years, the standard approach was simple: build the most powerful system possible. This meant pairing a high-performance head node, often a general-purpose x86-based server, with banks of powerful GPUs. The logic was that a beefy head node was necessary to handle the demanding preprocessing and task scheduling for the GPUs. But this approach is fundamentally inefficient. In many AI workflows, GPUs are not busy 100% of the time. They sit idle during data loading, preprocessing, and the final stages of a task. During these idle periods, the powerful head node continues to draw a significant amount of electricity, creating a hidden energy tax. This is particularly wasteful for servers that handle fluctuating workloads and for smaller, on-device AI applications where every milliwatt matters.
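To put a rough number on that hidden energy tax, the arithmetic can be sketched as below. This is a back-of-the-envelope model, not a measurement: the wattages and the 60% GPU utilization are hypothetical figures chosen for illustration, and the function name `idle_energy_kwh` is an assumption of this sketch. It also assumes the head node draws constant power regardless of load.

```python
def idle_energy_kwh(head_node_watts: float, gpu_utilization: float, hours: float) -> float:
    """Energy (kWh) the head node draws during the hours the GPUs sit idle.

    Simplifying assumption: the head node draws a constant `head_node_watts`.
    """
    if not 0.0 <= gpu_utilization <= 1.0:
        raise ValueError("gpu_utilization must be between 0 and 1")
    idle_hours = hours * (1.0 - gpu_utilization)
    return head_node_watts * idle_hours / 1000.0  # watt-hours to kWh


HOURS_PER_YEAR = 24 * 365

# Hypothetical figures, for illustration only.
x86_kwh = idle_energy_kwh(head_node_watts=400, gpu_utilization=0.6, hours=HOURS_PER_YEAR)
low_power_kwh = idle_energy_kwh(head_node_watts=100, gpu_utilization=0.6, hours=HOURS_PER_YEAR)

print(f"High-power head node, GPU-idle energy per year: {x86_kwh:.1f} kWh")
print(f"Low-power head node, GPU-idle energy per year:  {low_power_kwh:.1f} kWh")
```

Under this simple model the idle-period energy scales linearly with head node wattage, so the saving depends entirely on the real power draw of the two systems being compared.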

The Low-Power Brain: A Smarter Approach

An optimized, low-power head node tackles this problem head-on by using a combination of energy-efficient hardware and intelligent software.

- Minimizing GPU Idle Time: The core of the issue is underutilization. A low-power head node works with advanced job scheduling and orchestration software to maximize the utilization of expensive, high-wattage GPUs. When a GPU finishes a task, the intelligent head node quickly reassigns it to the next available job in the queue or, if idle, puts it into a low-power state. This improves overall system throughput while dramatically reducing energy waste. The difference can be staggering, essentially cutting power consumption in half during idle periods by preventing the GPUs from needlessly draining power.

- Offloading Non-Critical Tasks: Not every task requires a high-performance CPU. An optimized head node can offload specific functions, such as data management or basic system monitoring, to less energy-intensive components. This frees up the main CPU for critical tasks and further reduces overall power draw.

- Intelligent Power Management: Modern, optimized head nodes use advanced power management features, allowing CPU cores to enter a deep sleep state when idle. This is crucial for systems with variable workloads, such as inference servers that handle unpredictable traffic patterns.

- Specializing for Efficiency: Rather than relying on general-purpose CPUs, low-power head nodes are designed with specialized, energy-efficient processors. These architectures are specifically engineered to handle AI workloads with a lower power draw than traditional CPUs.

- Reducing Data Transfer Costs: Energy is consumed with every bit of data moved within a system. Low-power head nodes can be designed with on-chip memory architectures that minimize energy-intensive off-chip data transfers. Emerging technologies, such as neuromorphic computing, which integrates memory directly into processing units, aim to eliminate data transfer altogether, representing the ultimate in power efficiency.
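The scheduling behavior described in the list above (hand a freed GPU the next queued job, otherwise drop it into a low-power state) can be sketched as a small simulation. Everything here is hypothetical: the class names `Gpu` and `HeadNodeScheduler`, the state labels, and the FIFO policy are assumptions made for illustration. The sketch only tracks state in memory; actually changing a GPU's power state is vendor-specific and is not shown.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Optional


@dataclass
class Gpu:
    name: str
    state: str = "low_power"   # either "busy" or "low_power"
    job: Optional[str] = None


class HeadNodeScheduler:
    """Toy FIFO scheduler: keep GPUs busy, park them when the queue is empty."""

    def __init__(self, gpu_names: Iterable[str]) -> None:
        self.gpus: Dict[str, Gpu] = {name: Gpu(name) for name in gpu_names}
        self.queue: Deque[str] = deque()

    def submit(self, job: str) -> None:
        self.queue.append(job)
        self._dispatch()

    def on_job_finished(self, gpu_name: str) -> None:
        gpu = self.gpus[gpu_name]
        gpu.job = None
        gpu.state = "low_power"   # park first; _dispatch may wake it again
        self._dispatch()

    def _dispatch(self) -> None:
        # Assign queued jobs to parked GPUs until either runs out.
        for gpu in self.gpus.values():
            if not self.queue:
                break
            if gpu.state == "low_power":
                gpu.job = self.queue.popleft()
                gpu.state = "busy"


scheduler = HeadNodeScheduler(["gpu0", "gpu1"])
for job in ["train-a", "train-b", "infer-c"]:
    scheduler.submit(job)

scheduler.on_job_finished("gpu0")   # gpu0 picks up the queued "infer-c"
scheduler.on_job_finished("gpu1")   # queue is empty, so gpu1 is parked
```

A real orchestrator would add priorities, preemption, and wake-up latency, but the core decision at each completion event is the same: reassign or park.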

Market Trends: A Move Toward Specialized, Distributed AI

The shift toward low-power head nodes is not just a niche optimization; it’s part of a broader, transformative market trend in Al infrastructure.

1. The Rise of Specialized AI Accelerators

The era of one-size-fits-all AI hardware is over. The market is increasingly turning to specialized chips designed specifically for AI tasks.

ASICs and FPGAs: Application-Specific Integrated Circuits (ASICs) and Field-Programmable Gate Arrays (FPGAs) are gaining traction because they can be precisely tailored to AI algorithms, offering a balance of high performance and low power consumption. For example, Intel’s Movidius Vision Processing Units (VPUs) are ASICs designed for energy-efficient computer vision at the edge.

2. The Power of Arm

Arm-based CPUs, once confined to mobile devices, are now moving into the data center. Their power efficiency makes them an attractive alternative to traditional x86 architectures for many cloud-based and server-side AI applications.

AWS Graviton: Amazon Web Services has famously adopted Arm Neoverse CPUs for its Graviton systems, which have proven to be significantly more energy-efficient than their x86 counterparts for many cloud data center workloads.

3. Edge Computing and On-Device AI

The move to perform AI tasks locally on devices, known as edge computing, is a major force driving the demand for low-power head nodes. Instead of sending every query to a distant, energy-intensive data center, AI is happening closer to the source of the data.

Benefits of Edge AI: Beyond power efficiency, edge AI offers lower latency, greater data security, and reduced network bandwidth requirements, making it ideal for applications in autonomous systems and the Internet of Things (IoT).

4. AI Orchestration and Resource Management

As AI platforms grow more complex, the need for intelligent resource management software is paramount. AI orchestration software dynamically allocates workloads to the most appropriate hardware, ensuring high utilization of expensive and powerful GPU resources.

Fujitsu’s Approach: Companies like Fujitsu are developing AI Computing Broker software that boosts GPU utilization by reallocating GPUs during the power-intensive pre- and post-processing phases, resulting in significant energy savings.
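The general idea behind utilization-aware allocation can be sketched as a simple placement rule: send each workload to the lowest-power free device that can run it, so that GPUs are reserved for the work that actually needs them. This is a minimal illustration and not a description of Fujitsu's software or any specific product; the names `Device`, `Workload`, and `place`, and all wattage figures, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Device:
    name: str
    kind: str          # "gpu" or "cpu" in this sketch
    watts: float       # hypothetical active power draw
    busy: bool = False


@dataclass
class Workload:
    name: str
    needs_gpu: bool    # e.g. training needs a GPU, preprocessing does not


def place(workload: Workload, devices: List[Device]) -> Optional[Device]:
    """Pick the lowest-power free device of the right kind, or None if all are busy."""
    wanted_kind = "gpu" if workload.needs_gpu else "cpu"
    candidates = [d for d in devices if not d.busy and d.kind == wanted_kind]
    if not candidates:
        return None
    chosen = min(candidates, key=lambda d: d.watts)
    chosen.busy = True
    return chosen


# Hypothetical cluster, for illustration only.
cluster = [
    Device("gpu0", "gpu", watts=700),
    Device("head-cpu", "cpu", watts=60),
    Device("aux-cpu", "cpu", watts=150),
]

prep = place(Workload("preprocess", needs_gpu=False), cluster)
train = place(Workload("train", needs_gpu=True), cluster)
waiting = place(Workload("train-2", needs_gpu=True), cluster)  # no free GPU left

print(prep.name, train.name, waiting)
```

Keeping preprocessing off the GPU leaves the accelerator free for the next compute-heavy job, which is the utilization effect this section describes.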

5. Neuromorphic and Advanced Architectures

The frontier of low-power AI lies in neuromorphic chips, which are inspired by the human brain. These chips aim to replicate neural networks in hardware with dramatically lower energy costs by integrating processing and memory. This field is still nascent, but it represents the long-term future of ultra-efficient, real-time AI.

Conclusion

For enterprises navigating the rising energy demands of AI, the solution is not simply to buy more powerful hardware. The strategic deployment of a suitable low-power head node is a foundational step toward a more sustainable and cost-effective AI strategy. By optimizing utilization, specializing hardware, and embracing distributed and intelligent architectures, businesses can build AI systems that are not only more powerful but also more responsible stewards of energy and capital. The brain of the AI system may be its smallest component, but optimizing it for power is proving to be one of the most intelligent moves an organization can make.