Ever dreamed of having a virtual agent protecting your online assets like a guardian angel or a bodyguard from your favourite videogame? Well, your dream might be about to come true.

Thanks to advances in Agentic AI, a form of AI that can mimic human intelligence more holistically and manage multiple workflows, virtual agents are now starting to be harnessed as yet another line of defence in an escalating cybersecurity threat landscape.

But can virtual AI agents really do as good a job as humans, you might be wondering?

And what might the overall impact of using Agentic AI be on the cybersecurity landscape in the long run?

After all, if history has taught us anything, it is surely that technology is fundamentally just a tool that can be harnessed by both good and bad actors.

To address some of these questions, and get an idea of what Agentic AI-powered cybersecurity might look like, we headed to Gartner’s Security and Risk Management Summit (22nd-24th September 2025) to find out what the experts think about this critical issue for the future of the industry.

Specifically, we heard from Eoin Hinchy, CEO of Tines, on best practices for secure and effective workflow automation, and from Leigh McMullen, Distinguished VP Analyst at Gartner, on whether or not cybersecurity is ready for AI agents.

We also spoke to Tim Bury, VP of Europe at Securonix, a 5x Gartner Magic Quadrant Leader in SIEM, and Cyrille Badeau, VP of International Sales at ThreatQuotient, a Securonix company, to find out how they are transforming SecOps with their Unified Defence SIEM powered by agentic AI.

What’s so special about Agentic AI?

Agentic AI is being hyped up in many industries, including cybersecurity, as one of the most exciting and transformative technologies emerging in the AI space. But before we get too carried away, let’s consider how this technology differs from other forms of AI such as ML and Generative AI.

First of all, Agentic AI differs from ML in terms of the architecture it is built on. Agentic AI is built on non-deterministic, event-driven architectures (EDAs), which are characterised by multi-dimensional workflows, unpredictable outcomes, and complex variables that are affected by event context.

ML, by contrast, is built on deterministic architectures that can best be understood as a kind of virtual assembly line: systems follow predefined, predictable processes that are set in advance by human software developers.

As McMullen explains, this event-driven architecture is what makes Agentic AI suitable for the more complex tasks of event/goal-driven systems as well as user interfaces (UIs) for virtual applications.

“Agent-based programming is for event-driven systems, or goal-driven systems.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

The most commonly encountered event-driven architectures are user interfaces (UIs) for virtual applications. But even basic versions of these architectures produce unpredictable events, simply because of the complex interactions between different variables that the structure allows.

“The first infinite loop you create in a UI [is] a cascading event. Cascading events are unpredictable events that occur as a result of complex systems and complex variables interacting with each other in ways that the developer can’t anticipate.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner
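To make the idea of a cascading event concrete, here is a minimal sketch of an in-memory event bus in Python. It is purely illustrative: the `EventBus` class, the event names and the handler rules are all hypothetical and are not taken from McMullen's talk or from any product. It shows how one event can fan out into several others, and how a handler that re-triggers its own event produces the kind of loop McMullen describes (capped here so that it terminates).

```python
from collections import defaultdict, deque


class EventBus:
    """Tiny in-memory event bus: handlers react to events and may emit new ones."""

    def __init__(self, max_events=100):
        self.handlers = defaultdict(list)
        self.max_events = max_events  # safety cap so a runaway cascade terminates

    def subscribe(self, event_type, handler):
        self.handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        """Process an event and everything it triggers; return the ordered trace."""
        queue = deque([(event_type, payload)])
        trace = []
        while queue and len(trace) < self.max_events:
            etype, data = queue.popleft()
            trace.append(etype)
            for handler in self.handlers[etype]:
                # Each handler returns a list of follow-up (event_type, payload) pairs.
                queue.extend(handler(data))
        return trace


def on_login_failed(data):
    # Hypothetical rule: three failures escalate to an account lock.
    return [("account_locked", data)] if data["failures"] >= 3 else []


def on_account_locked(data):
    # One event fans out into two further events.
    return [("ticket_opened", data), ("user_notified", data)]


def on_text_changed(data):
    # A UI handler that re-triggers its own event, i.e. an accidental loop.
    return [("text_changed", data)]


bus = EventBus(max_events=10)
bus.subscribe("login_failed", on_login_failed)
bus.subscribe("account_locked", on_account_locked)
bus.subscribe("text_changed", on_text_changed)

cascade = bus.publish("login_failed", {"user": "alice", "failures": 3})
loop = bus.publish("text_changed", {"value": "a"})
print(cascade)
print(len(loop))
```

Even with three handlers, the order and number of events depends on the payload and on which handlers happen to be subscribed, which is why adding more variables and integrations makes the cascades hard to anticipate.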

For the majority of real-world use cases, this architecture quickly results in complex adaptive systems because of the number of variables involved.

“The more variables you add, the more complicated it gets. And if you study chaos engineering, which I do, you know that basically any linear system becomes a complex adaptive system once you add more than say 10 variables to it, or once it starts to integrate more than 3 or 4 other systems. Then it becomes very difficult to anticipate all of the variable cascades that you will be dealing with. Especially if you’re dealing with something more than just the user interface, it could get complicated in a hurry, since most business processes themselves are event-driven.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

Thus, the event-driven architecture of Agentic AI opens up the autonomous management of far more complex systems than is possible under the static and deterministic workflows that characterise ML. For example, EDAs underlie the business models of several large and successful companies including Netflix and Uber, where the system interacts dynamically with user behaviour and other ‘events’ such as the completion of an action.

But event-driven architectures are not unique to Agentic AI. They are also used in Generative AI. For example, Apache Flink, a leading stream-processing engine, can serve as the event-driven backbone for more advanced Large Language Model (LLM) applications which are enhanced with vector databases. This enables the LLMs to perform more advanced functions such as retrieval-augmented generation (RAG), semantic search, and dynamic knowledge enrichment from real-time data.

So what is the difference between Agentic AI and Generative AI, you might be wondering? Given the multiple types of architectures used for LLMs, the key distinction here is less a structural one than an operational one.

Generative AI is optimised for single-task content creation, which means that it remains dependent on user inputs to produce results. By contrast, Agentic AI is goal-oriented, which enables it to function autonomously, and act proactively rather than just reactively.

So to summarise, what really sets Agentic AI apart is that it can manage workflows across multiple domains to achieve goals with minimal supervision. And this is where both the challenge and the almost human-like genius of Agentic AI systems lie, as McMullen points out.

“Multidomain agents pursue goals, and this is the hardest part because it involves goal reasoning.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

What is the value of Agentic AI for cybersecurity systems?

As you’d expect at a tech conference, the general mood towards AI’s adoption in the cybersecurity sector was pretty optimistic at the Security and Risk Management Summit.

So let’s first dig into the cause for this optimism, and unpack some of the benefits that Agentic AI brings to the cybersecurity sector.

Fundamentally, Agentic AI brings all the benefits associated with automation to more complex systems, raising the potential ROI of automation.

At Securonix, for example, a company whose journey with AI started approximately 20 years ago using ML algorithms for User and Entity Behaviour Analytics (UEBA), there are 8 AI agents currently being deployed across its Unified Defence System to enhance productivity, essentially acting as assistants or seconds-in-command to human security analysts.

“The agents are there to detect threats faster, drive efficiency, simplify [tasks], reduce cost, and increase speed” ~ Tim Bury, VP of Europe at Securonix

According to Hinchy, the real benefit of Agentic AI comes from the more complex use-cases it opens up.

“Agentic workflows give us access to use-cases that were previously out of reach” ~ Eoin Hinchy, Tines CEO

By opening up the potential of automating more lucrative workflows, Agentic AI could address the ambiguity around the ROI of investing in AI for efficiency gains.

Indeed, according to an MIT study, 90% of businesses that have tried to adopt GenAI have failed to realise any significant impact on P&L, despite the fact that GenAI continues to be hailed as the defining transformative technology of our times. But this could be due to the often generic applications that AI is used for.

“When we talk about AI, we would break that down into what we call Agentic AI, because it’s really down to specific areas or roles. If you’re too generic about it, you lose the benefit” ~ Tim Bury, VP of Europe at Securonix

Similarly, Gartner Analyst Jeremy D’Hoinne emphasises the importance of aligning cybersecurity objectives with specific use-cases where AI can bring about real value.

“AI requires increased cybersecurity investments, not less – and CISOs must ensure cybersecurity objectives drive AI use cases rather than adopting AI for its own sake.” ~ Jeremy D’Hoinne, Distinguished VP Analyst at Gartner

So what are some of these specific use-cases? Below we consider some of the roles for AI Agents at Securonix to get a better idea of where this technology can bring the greatest value within cybersecurity.

- Data Pipeline Management

“So we have a Data Pipeline Manager that enables you to tier data, which is essentially about cost-savings. The reason why you want an AI agent is to be able to do that more effectively by automating the decision process of the value of the data and what tier to put it into. The agent will essentially be making decisions about the flow of that data source, which is going to be based on maximum efficiency, which means maximum cost savings. So this is a massive evolution. And the AI agent is really helping from point of view of actually doing the grunt work, the low-level work. What we’re trying to do is take away those actions that no-one wants to do. The analysts don’t want to do it, so we get the agents to do it.” ~ Tim Bury, VP of Europe at Securonix

- Noise Cancellation

“Noise cancellation is another use-case for our agents. So by noise, what I’m talking about here is getting so many violations and alerts that you don’t know what to do with them. You don’t know which ones are important and which ones aren’t, so what we’re trying to do is reduce the number to only the ones that really should be investigated because the chances are they could be a positive breach attempt. So with the noise cancellation agent, the objective here is to be able to say, well, we know by looking at the past, that these kinds of incidents are actually not important and so we’re going to be able to strip those out so that you end up with a smaller and smaller number of alerts over time. And this is possible because the agent is learning what you have previously classified as being important and not important.” ~ Tim Bury, VP of Europe at Securonix

- Threat-Intel

“So the agents are there to identify trends and wake you up. For example, just imagine if we had our full team with absolutely nothing to do all day long. We would ask them to just randomly search and find trends. Nobody does that, of course, but AI agents can do that. AI agents can find a trend, and potentially something that is a real threat, based on what we know from our threat-intel, and based on all the weak signals we get that aren’t strong enough to trigger an alert.” ~ Cyrille Badeau, VP of International Sales at ThreatQuotient, a Securonix company

- Agentic AI built to scale

Integration tasks are another use-case that Agentic AI can help out with. For example, Securonix recently acquired ThreatQuotient, which opens up a use case for AI agents to help further streamline the process of integrating into a company’s environment.

“There’s a natural case for other agents. ThreatQuotient will be fully integrated into Securonix, into our SIEM, and our platform. We now need to look at Agentic AI and ask how we can more effectively streamline integrations and faster. How can we do it with more intelligence, how do we make it more automated so that the humans using it are able to focus on acting more quickly with greater efficacy?” ~ Tim Bury, VP of Europe at Securonix

Nevertheless, as Badeau points out, the use-cases for AI agents are still emerging, and given the past trajectory of AI adoption, we can expect the use-cases for AI agents to look vastly different in several years’ time to how they look today.

“Even though we already have 8 [agents] in play, we are probably still far from identifying all the areas where AI will provide more value. I think I would be surprised if anybody in this space can guess what the best use-cases of AI are gonna be in a couple of years in our business. Everything is evolving a lot. What we were doing, for example, in threat-intel, background, and memory, and how it’s leveraged within SIEM today has nothing to do with what we were doing two years ago.” ~ Cyrille Badeau, VP of International Sales at ThreatQuotient, a Securonix company
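The noise-cancellation use-case Bury describes, where an agent learns from what analysts have previously classified as important or unimportant, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Securonix's implementation: the `NoiseFilter` class, the alert signatures and the thresholds are all hypothetical, and a simple per-signature frequency count stands in for whatever learning method a real product uses.

```python
from collections import defaultdict


class NoiseFilter:
    """Suppress alert types that analysts have repeatedly marked as unimportant."""

    def __init__(self, min_history=5, max_important_rate=0.05):
        self.min_history = min_history                # verdicts needed before suppressing
        self.max_important_rate = max_important_rate  # tolerated share of real incidents
        self.stats = defaultdict(lambda: [0, 0])      # signature -> [seen, important]

    def record_verdict(self, signature, important):
        """Store an analyst's past classification of one alert."""
        entry = self.stats[signature]
        entry[0] += 1
        entry[1] += int(important)

    def should_suppress(self, signature):
        seen, important = self.stats.get(signature, (0, 0))
        if seen < self.min_history:
            return False  # not enough evidence yet: keep the alert visible
        return important / seen <= self.max_important_rate

    def triage(self, alerts):
        """Return only the alerts that still deserve an analyst's attention."""
        return [a for a in alerts if not self.should_suppress(a["signature"])]


noise_filter = NoiseFilter(min_history=3, max_important_rate=0.0)
for _ in range(3):
    noise_filter.record_verdict("vpn-login-new-device", important=False)
noise_filter.record_verdict("admin-priv-escalation", important=True)

alerts = [
    {"id": 1, "signature": "vpn-login-new-device"},
    {"id": 2, "signature": "admin-priv-escalation"},
    {"id": 3, "signature": "never-seen-before"},
]
remaining = noise_filter.triage(alerts)
print([a["id"] for a in remaining])
```

Note the conservative default in this sketch: an alert type with little or no history is always shown, so the filter can only shrink the queue once analysts have given it enough verdicts to learn from.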

What are the risks of using Agentic AI in cybersecurity?

The security risks of Agentic AI have been pointed out by none other than OpenAI CEO Sam Altman himself.

“I think people are going to be slow to get comfortable with agentic AI in many ways, but I also [think] that even if some people are comfortable and some aren’t, we’re gonna have AI systems clicking around the Internet. And this is, I think, the most interesting and consequential safety challenge we have yet faced because [it is] AI that you give access to your systems, information, [and] the ability to click around your computer. Now, when AI makes mistakes, it’s much higher stakes.” ~ Sam Altman, extract from an interview at TED25.

So when it comes to the risk-oriented world of cybersecurity, you might be wondering if Agentic AI is the best idea. But before jumping to any conclusions, let’s look at the nature of these risks, and consider some ideas about how they can be mitigated.

As we explained earlier, Agentic AI is built on event-driven architecture. This is typically considered to be more secure against traditional hacking methods, as it has a less predictable structure than deterministic architectures, making it harder for hackers to exploit weaknesses. However, McMullen explains that the event-driven architecture of Agentic AI also makes it more vulnerable to manipulation by threat actors.

“The reason why old-school applications are hard to secure is because they are finite state machines. You have to define every state these machines are in, including all of their error states or false states. In event-driven systems that’s not a problem. If they are in a state they don’t understand they just ignore it. If someone gives them a stimulus they don’t understand, they just ignore it. And in many ways, event-driven systems and even agents can be a lot more secure in the face of traditional hacking methods, which typically involve finding vulnerabilities in the finite state machine that you can exploit to cause or initiate arbitrary action. But here’s the thing, you don’t hack agent-based systems, because you don’t have to. All you have to do is confuse them. All a threat actor really has to do to affect the performance and cause arbitrary action is to confuse the signal chain.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

In more complex systems where you might find multiple agents interacting with each other, this problem would only be exacerbated by the increasing number of entry points a threat actor could use to manipulate the system. Furthermore, the consequences of the manipulation would reach much further, since all the agents would be ‘basically prompting each other’, as McMullen put it.

“These agents are not going to exist in a vacuum like an aeroplane by itself. They’re going to exist in an ecosystem where they’ll be exchanging information with each other, and constantly creating new events and new stimuli in this ecosystem, which will change their arrangement and capabilities dynamically on the block.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

Beyond this, McMullen identifies 4 key security issues with Agentic AI. These are issues that would arise from the use of AI agents not only within SecOps, but also within any other business operations. Thus, with Agentic AI already being adopted across a range of industries, it is imperative to have an awareness of these issues and how they can be mitigated.

- AI Agents are virtual entities, which means that, just like anything digital, they are susceptible to impersonation.

“Virtual agents, their taskers, guardians and signals can all be impersonated. Because just in the same way that you can’t uniquely identify an electron, it is impossible to ultimately uniquely identify anything that is truly digital without going to some pretty extraordinary means, which we know the people designing these ecosystems aren’t doing.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

- AI agents are controlled remotely, which is a vulnerability because externally defined limits and controls can be (re)defined externally.

“Anything that can be remotely controlled by somebody can be remotely controlled by somebody else.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

- AI agents are goal-oriented, which makes them vulnerable to manipulation because people can hack goal assumptions, i.e. the understanding the AI agent has of what the goal means.

“People will not just hack goals, they will also hack goal assumptions. This is essentially manipulating two different senses of assumptions to redefine what the goal is. And it’s incredibly easy to do, simply because of data gravity; because there is so much conflicting data in our world that in many cases nudging a model to understand a goal in a different sense is a trivial matter.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

- The data transmission networks that Agentic AI uses will allow agents to bypass traditional security measures such as API security.

“Agents, and complex agent networks will attempt to co-simplify tasks by communicating states. But ‘state’ might as well be a Trojan. When agents are exchanging prompts, which is exactly what they’re gonna do, they’re gonna be bypassing API security. Exchanging prompts basically lets us pass through context, and if we change the context we change the meaning.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

The future of SecOps: how can we simultaneously mitigate the risks and embrace the gains of Agentic AI?

In light of both the benefits and risks of Agentic AI that we have highlighted in this article, let’s finish up with some key takeaways for how these risks can best be mitigated, and some best practices to keep in mind for using Agentic AI responsibly in the cybersecurity landscape.

Below, we outline 4 key tenets of the secure and responsible management of Agentic AI: 1) an identity management system for virtual agents, 2) the use of a ‘guardian agent’ to monitor complex Agentic AI ecosystems, 3) the combination of large AI models with small AI models within a complex ecosystem, and 4) integration with human-in-the-loop workflows.

Identity management systems for virtual agents

Identity management systems address the problems that come from AI agents being fundamentally digital entities.

Just like any other digital assets, which are typically protected through data encryption, AI agents should have some form of credential by which they can be recognised and validated as legitimate digital entities. This would both ensure the safety of online users interacting with AI agents, and limit the liability of the companies using AI agents to carry out various digital processes.

“We’re probably going to need some kind of identity management for agents. Some kind of role-based access control and fine-grained policies for agents to counteract impersonation.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner
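As a rough illustration of what identity management plus access control for agents could look like, the Python sketch below signs each agent request with a per-agent secret (using the standard-library `hmac` module) and then checks the request against a role-to-permission table. Everything specific here is an assumption made for the example: the agent names, keys, roles and permissions are hypothetical, and a production system would also need key rotation and replay protection (for example a timestamp or nonce in the signed message), which are omitted for brevity.

```python
import hashlib
import hmac

# Hypothetical registry: each agent has a secret key and exactly one role.
AGENT_KEYS = {"triage-agent": b"key-triage", "pipeline-agent": b"key-pipeline"}
AGENT_ROLES = {"triage-agent": "analyst_assistant", "pipeline-agent": "data_manager"}
ROLE_PERMISSIONS = {
    "analyst_assistant": {"read_alerts", "annotate_alert"},
    "data_manager": {"read_logs", "retier_data"},
}


def sign(agent_id, action, key):
    """Produce an HMAC-SHA256 signature binding the agent identity to the action."""
    message = f"{agent_id}|{action}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def authorize(agent_id, action, signature):
    """Allow the action only if the signature is valid AND the role permits it."""
    key = AGENT_KEYS.get(agent_id)
    if key is None:
        return False  # unknown agent
    expected = sign(agent_id, action, key)
    if not hmac.compare_digest(expected, signature):
        return False  # impersonation attempt or tampered request
    role = AGENT_ROLES.get(agent_id)
    return action in ROLE_PERMISSIONS.get(role, set())


genuine = sign("triage-agent", "read_alerts", AGENT_KEYS["triage-agent"])
forged = sign("triage-agent", "read_alerts", b"attacker-guess")
out_of_role = sign("triage-agent", "retier_data", AGENT_KEYS["triage-agent"])

print(authorize("triage-agent", "read_alerts", genuine))
print(authorize("triage-agent", "read_alerts", forged))
print(authorize("triage-agent", "retier_data", out_of_role))
```

The two checks address different failures: the signature check counters impersonation, while the role table limits what even a genuine agent is allowed to do.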

‘Guardian agents’ to monitor complex Agentic AI ecosystems

The idea behind having a meta-system whereby a system of AI agents is monitored by another AI agent is really about addressing the issue of accountability.

While, for the foreseeable future, Agentic AI systems will remain ultimately answerable to humans, the benefit of having an additional ‘guardian agent’ to monitor Agentic AI ecosystems would be to pick up on any anomalies occurring in the system more efficiently, which could then be flagged to a human analyst. This would ultimately reduce the workload of the human analyst and act as a status indicator of the health and performance of the overall system.

“There’s the idea of the guardian agent, which, like a master chef, can oversee the process and what’s happening. I don’t believe in a consensus model because consensus models of AI are too expensive. Instead, the guardian agent would be like a generative adversarial network which would learn the appropriate behaviours just by observation, and then mention when something was an anomaly.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner
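The core loop of a guardian agent, observing normal behaviour and then flagging departures from it, can be sketched as follows. Note the substitution: McMullen likens the guardian to a generative adversarial network, whereas this Python example uses a plain frequency baseline so that it stays short and runnable. The `GuardianAgent` class, the agent names and the actions are hypothetical.

```python
from collections import Counter


class GuardianAgent:
    """Learns which (agent, action) pairs are normal, then flags unusual ones."""

    def __init__(self, min_count=2):
        self.baseline = Counter()
        self.min_count = min_count  # how often a behaviour must be seen to count as normal

    def observe(self, agent_id, action):
        """Learning phase: record behaviour seen during normal operation."""
        self.baseline[(agent_id, action)] += 1

    def review(self, agent_id, action):
        """Return a message for a human analyst if the behaviour is unusual, else None."""
        if self.baseline[(agent_id, action)] < self.min_count:
            return f"ANOMALY: {agent_id} performed unusual action '{action}'"
        return None


guardian = GuardianAgent(min_count=2)
for _ in range(5):
    guardian.observe("triage-agent", "read_alerts")

print(guardian.review("triage-agent", "read_alerts"))
print(guardian.review("triage-agent", "delete_logs"))
```

The guardian in this sketch never blocks anything itself; it only raises a flag, which keeps the final decision with the human analyst.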

Combining large AI models with small AI models

The importance of having small AI models working alongside larger models comes from the fact that as a model’s parameter count grows, so does its uncertainty. This essentially means that small models are harder to fool, and are thus more resilient against manipulation from threat actors, especially in the form of goal-assumption hacking.

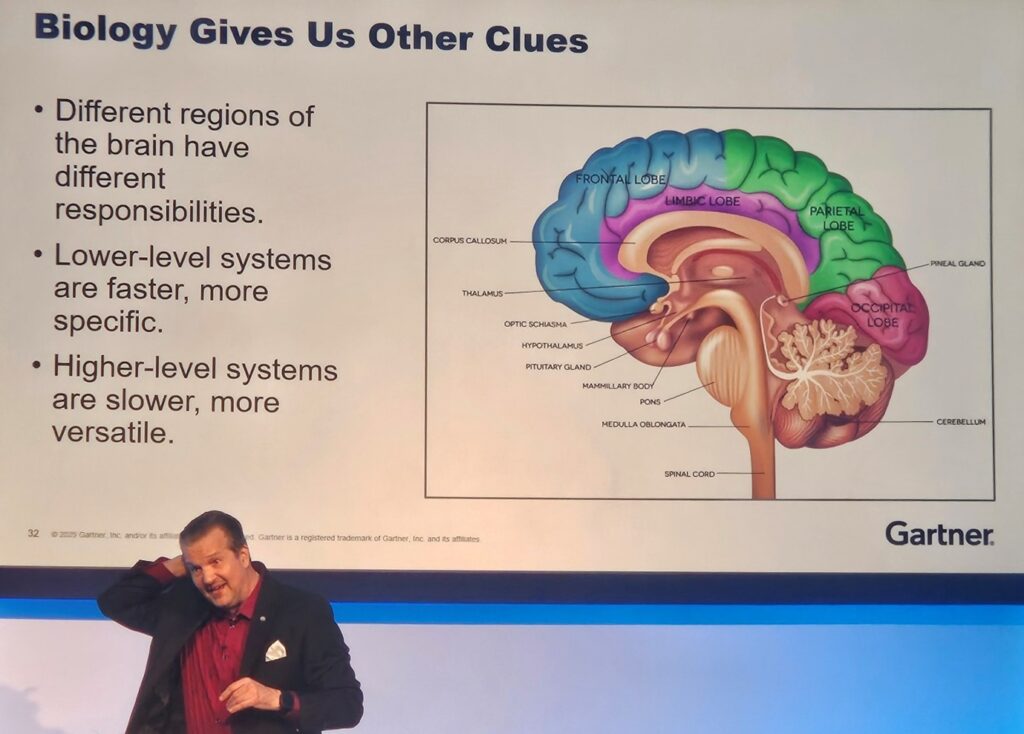

And there is good reason to believe that the sturdiness of small models would enhance the performance of the overall Agentic AI ecosystem by making sure that the larger models remain on the right track. After all, this is essentially how the most evolved intelligence engine we know of, the human brain, works.

“The best metaphor for this is the human brain. For example, your amygdala, a part of the brain that operates your fight-or-flight response, operates 50,000x faster than the prefrontal cortex, the part of your brain that makes decisions. That’s why you literally can’t think straight when you’re angry. And this tells us about the value of smaller models. You could have collections of small models working together in an ecosystem with larger models, because just like the amygdala, these smaller models [are] very hard to fool, because what they care about is much narrower. And so this ecosystem could evolve in a similar way to how the brain has evolved, which essentially works on a consensus model.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

Human-in-the-loop workflows

Last but not least is perhaps the most important tenet of all: the notion of integrating Agentic AI with human-in-the-loop workflows. This is because, while Agentic AI arguably mimics the holistic nature of human intelligence more accurately than any other form of AI, it is by no means at the level where it can be entrusted to take full responsibility for the actions of a human individual, let alone an organisation.

“We are not at this stage going to just let an AI agent make decisions on behalf of the organisation that will bear the consequences of those decisions. There may be times in the future where some things can be so fully automated that there’s no risk of AI agents making those decisions, because a human has said, if you see this kind of situation, it’s OK to take action. But primarily, humans are going to be the ones that are ultimately reviewing and saying yes, this is the action we require and I’m going to investigate this now.”

“For all the agents that we are creating, we can’t absolve our responsibility, so we still need to have what we call human-in-the-loop. So the humans are still gonna make the decisions, but what we’re trying to do is help them make the right decisions at a quicker pace, which will drive greater efficiency and cost-savings.” ~ Tim Bury, VP of Europe at Securonix

Human-in-the-loop workflows are also fundamental to the future of work, ensuring that human roles are not completely wiped out by the sweeping tide of automation that is impacting companies across all industries. Indeed, as lower-level, menial tasks are increasingly taken over by AI, the role of humans is increasingly becoming one of strategic planning, concerned with the bigger picture of what companies are trying to achieve both in the here-and-now, and in the future.

“Human-led workflows are vital when it comes to strategic planning” ~ Eoin Hinchy, Tines CEO

Indeed, the place of humans within the future of work is by no means just a nice-to-have. Human-in-the-loop workflows are a necessity primarily because of the limitations of the technology itself.

As McMullen points out, AI has a fundamental scalability problem: you cannot scale a feedback loop or exception handling of a business process without giving AI enough agency to disobey you.

“An exception handling loop means doing something different to the standard business processes. This is really important as businesses are running headlong into this GenAI space. They’re not seeing that one little point, that you’re not going to get scalability out of the part of the feedback loop where you probably want the most scalability, which is middle management. And this is the part that costs us the most money, that remains the least manageable part of every enterprise.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner

And this cannot be fixed by simply imposing guardrails, and training the AI not to cross certain lines. This is because non-deterministic AI cannot enforce guardrails on itself.

“At the end of every non-deterministic or probabilistic reasoning chain, there must be a determinative control. You have to operate these things as you would operate people who have no fear of consequences.” ~ Leigh McMullen, Distinguished VP Analyst at Gartner
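One way to picture a deterministic control sitting at the end of a probabilistic reasoning chain is a fixed, rule-based gate that every agent-proposed action must pass through before anything is executed. The Python sketch below is an assumption-laden illustration rather than a recommended design: the `ControlGate` class and the action names are hypothetical. Actions a human has pre-approved run automatically, forbidden actions are always blocked, and everything else waits for human review.

```python
from dataclasses import dataclass, field

# Hypothetical policy, written and maintained by humans rather than by the agent.
PRE_APPROVED = {"quarantine_email", "open_ticket"}
FORBIDDEN = {"delete_backups", "disable_logging"}


@dataclass
class ControlGate:
    """Deterministic gate: the same proposed action always gets the same decision."""

    review_queue: list = field(default_factory=list)
    executed: list = field(default_factory=list)

    def submit(self, action, target):
        """Called with whatever the agent proposes, however it reasoned its way there."""
        if action in FORBIDDEN:
            return "blocked"
        if action in PRE_APPROVED:
            self.executed.append((action, target))
            return "executed"
        self.review_queue.append((action, target))
        return "needs_human_review"

    def approve(self, index=0):
        """A human analyst signs off on a queued action, which is then executed."""
        action, target = self.review_queue.pop(index)
        self.executed.append((action, target))
        return action, target


gate = ControlGate()
print(gate.submit("open_ticket", "INC-1"))
print(gate.submit("isolate_host", "srv-7"))
print(gate.submit("disable_logging", "siem"))
gate.approve()
print(gate.executed)
```

Because the gate contains no model and no learned behaviour, an agent that has been confused or manipulated cannot talk its way past it; it can only propose actions that the fixed rules will execute, queue or reject.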