- 9 out of 10 organisations use multiple types of testing, including UX, accessibility and payment testing

- Nearly a third of organisations rely on partners to overcome testing challenges

- Another third leverage ‘crowdtesting’ to mitigate AI risks and provide comprehensive quality

Berlin, DACH & Boston, Mass., US – September 17, 2025: Applause, a leader in digital quality and crowdsourced testing, has published its fourth annual report, The State of Digital Quality in Functional Testing 2025. The report shows that although AI usage for functional software testing has more than doubled in the past year, a staggering 92% of organisations find it challenging to keep pace with rapidly changing requirements. Despite the sharp increase in AI-powered functional testing, organisations maintain that keeping humans in the loop (HITL) is integral to quality assurance (QA).

Based on a global survey of more than 2,100 software development and testing professionals, the report explores AI testing use cases and challenges, the metrics used to assess digital quality, and how organisations are embracing QA earlier in development.

Customer satisfaction and customer sentiment/feedback are the top metrics for assessing software quality. In terms of testing types, user experience (UX) testing is the most popular at 68%. Usability testing (59%), which measures ease of use, and user acceptance testing or UAT (54%) are also widely used.

Significant testing challenges persist, despite AI efficiencies

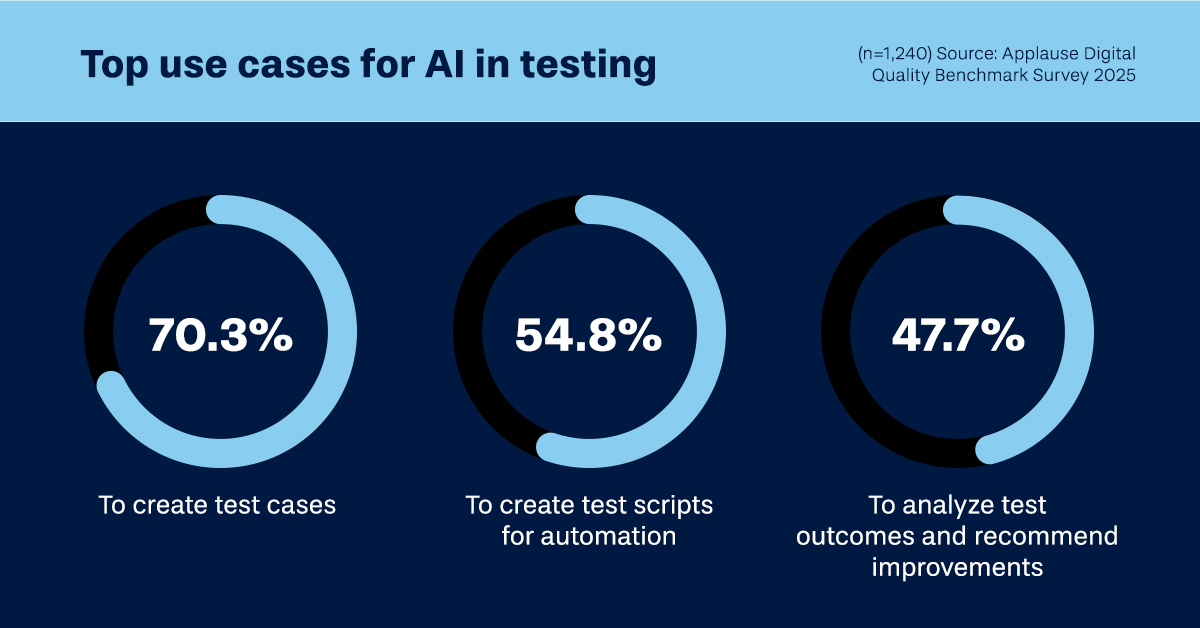

When it comes to AI, 60% of respondents reported that their organisations use AI in the testing process. This contrasts with the 2024 survey, which revealed that only 30% were using AI to build test cases – and just under 32% were using it for reporting. Examples from this year's survey include leveraging AI to develop test cases (70%), automate test scripts (55%) and analyse results/recommend improvements (48%). Other use cases include test case prioritisation, autonomous test execution/adaptation, finding gaps in coverage and self-healing test automation.

Despite the swift rise in adoption, 80% of respondents lack in-house AI testing expertise, and nearly a third of them lean on a partner to overcome testing challenges. Additional obstacles include inconsistent/unstable environments (87%) and lack of time for sufficient testing (85%).

AI and automation alone cannot provide the end-to-end test coverage enterprises demand. A third of survey respondents (33%) leverage crowdtesting – an effective approach to bridging gaps and mitigating agentic AI’s risks through HITL test coverage.

Organisations adopt a shift-left approach to QA

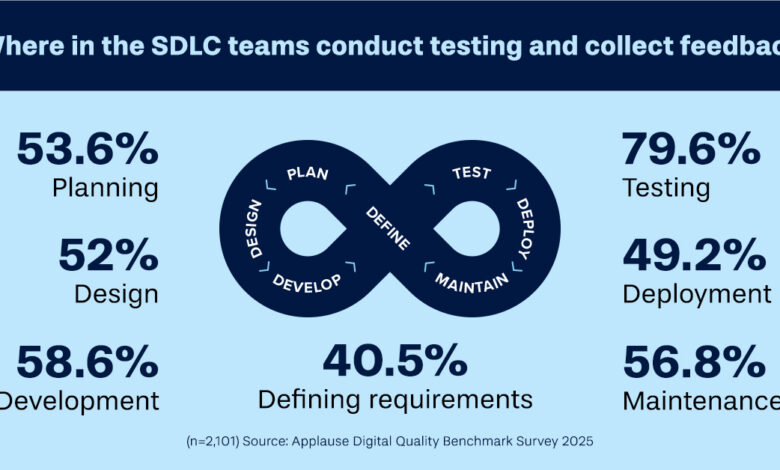

While a previous survey found that 42% of respondents tested at only a single stage of the software development lifecycle (SDLC), this year just 15% limit testing to a single stage. Over half of organisations address QA during the planning, design and maintenance phases of the SDLC.

In addition, 91% of respondents reported that their team conducts multiple types of functional tests, including performance testing, UX testing, accessibility testing, payment testing and more.

“Software quality assurance has always been a moving target,” said Rob Mason, Chief Technology Officer, Applause. “And, as our report reveals, development organisations are leaning more on generative and agentic AI solutions to drive QA efforts. To meet increasing user expectations while managing AI risks, it’s critical to assess and evaluate the tools, processes and capabilities we’re using for QA on an ongoing basis – before even thinking about testing the apps and websites themselves.”

Mason added: “Agentic AI requires human intervention to avoid quality issues that have the potential to do serious harm, given the speed and scale at which agents operate. The trick is to embed human influence and safeguards early and throughout development without slowing down the process, and we know this is achievable given the results of our survey and our own experiences working with global enterprises that have been at the forefront of AI integration.”