At the OpenText World Conference at the Queen Elizabeth Centre in London, CEO and CTO Mark Barrenechea and CPO Muhi Majzoub showcased the updates OpenText will be rolling out to customers in its Aviator suite update, CE 24.2. One area that caught my interest was how Muhi, since taking on the role 10+ years ago, has shortened OpenText’s software release cycle from 18-24 months to a 90-day, or quarterly, cycle, with plans of “eventually getting to a 30-day cycle.”

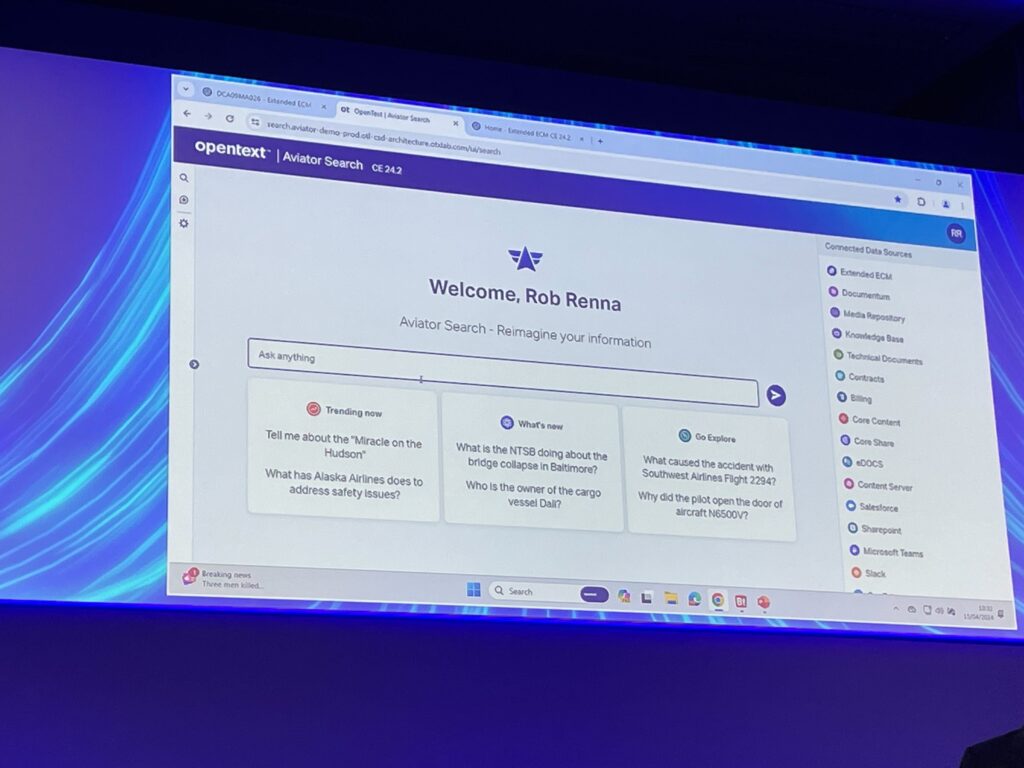

OpenText’s offerings, which are packaged up in the Aviator suite, leverage advanced technologies like machine learning (ML) and natural language processing (NLP) to drive efficiency, data-driven decision-making, and sustainability for customers across industries, including Hyatt, RBC, Heineken, L’Oreal, Santander, and Airbus.

There were two fireside-style conversations with OpenText customers in the morning. The first was between Mark Barrenechea and Sri Kanisapakkam from Nationwide Bank. The second was between Muhi Majzoub and Leon Van Niekerk from Pick N Pay.

A focus of the demos was how important it is for businesses to put AI into workflows that solve a challenge or present an opportunity for their customers, particularly in sectors such as manufacturing, which was relevant to the conversation between Muhi and Leon, and banking, which was relevant to the conversation between Mark and Sri.

Sri from Nationwide highlighted how fast technology is moving and the impact that has on making sure processes, in his case in banking, are safe to use and that the proper precautions have been taken before putting AI into a live environment.

Testimony from Leon Van Niekerk of Pick N Pay highlighted the tangible benefits of OpenText’s solutions, with the company able to “bring an onboarding process down by 5-6 weeks as the cases are there for them to learn, we don’t need to write them.”

Mark J. Barrenechea emphasized the impact AI is going to have, stating, “This is 10x the internet boom.”

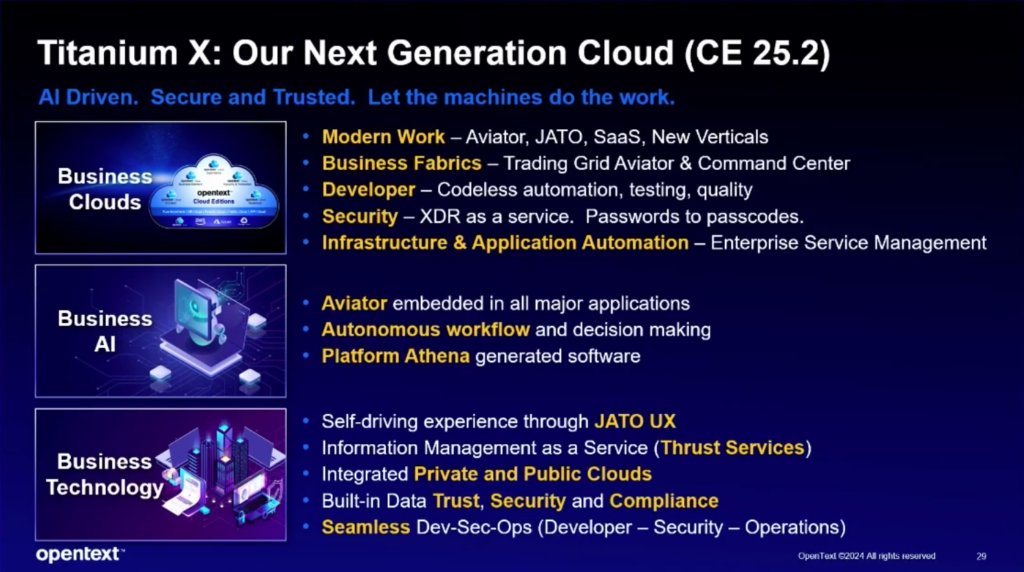

TitaniumX

OpenText’s planned roadmap for the TitaniumX platform exemplifies this approach, with built-in AI for uses ranging from security, faster testing and quality control, and autonomous workflows to integrating different clouds. It’s scheduled to be released in Cloud Edition 25.2, which means the second quarter of 2025. So by the time we hopefully get invited back next year, and to the Las Vegas version in November, we will have more information and demos to look at.

I don’t understand why they are adding this new name separate from the other names such as Content Aviator, DevOps, and Aviator IoT. I honestly don’t know what Titanium or the letter X has to do with anything they have produced so far, and I have even less idea of what ‘Platform Athena’ means. They need to take a lesson from Apple and Steve Jobs’s thinking on that. Apple had a board over one of the entrances to the Menlo Park campus that said ‘Simplify, Simplify, Simplify, Simplify’.

That said, the platform capabilities look helpful. It seems from what Muhi said in a private roundtable that customers will eventually be able to use OpenText as a digital hub, without the need for dozens of separate tools or subscriptions to scale their AI needs. By enabling organizations to “let the machines do the job,” TitaniumX promises to unlock productivity gains, cost savings, and a competitive edge in an increasingly data-driven business landscape.

The event flagged the importance of responsible and ethical AI development. “We must ensure the clean and ethical use of green technology to reduce energy consumption and make a positive impact on the environment,” Mark Barrenechea, CEO and CTO, stressed. As AI systems become more ubiquitous, their carbon footprint cannot be ignored, and OpenText’s solutions, as shown in the demos, aim to strike a balance between innovation and sustainability.

Mark followed this by saying he believes in “never deleting anything, never wasting anything,” which is somewhat contradictory to the company’s efforts to reduce the energy consumed by storage. For a company with roughly 50,000 active customers served by its 2 twin data centers, 13 core data centers, and 8 satellite Points of Presence (POPs), that’s a lot of data to actively keep racking up in storage.

On the other hand (in Jon Fortt’s voice), what Mark seemed to be saying was: don’t create something in the first place that’s not going to be valuable. It’s similar to ordering food when you’re hungry: you over-order, can’t eat it all, and create waste.

Bertrand Piccard, the guest speaker who has accomplished first-of-their-kind feats such as traversing the globe in a hot air balloon, made a similar point about how much less of a problem climate change would be if we only consumed and used what we need.

How to handle increasing amounts of information

Information management emerged as a crucial component of OpenText’s enterprise offerings. Muhi Majzoub, Chief Product Officer at OpenText, highlighted the company’s commitment to this area, stating, “Information management is informative for the enterprise. We’re standing behind an SLA framework and taking on the risk of the penalties from the cloud.”

This was labeled ‘Data Cloud at Scale’, which is how OpenText plans to create industry- or use case-specific language models for its customers’ unique challenges while ensuring they are safe and stress-tested at length.

OpenText’s robust infrastructure, spanning 3 twin data centers, 13 core data centers, and 8 satellite Points of Presence (POPs), enables it to handle and process over 100 million documents securely and efficiently, according to the demo and presentation. This capability is particularly valuable in an era where data is a strategic asset and enterprises have to navigate complex regulatory landscapes across borders, and even more so for certain industries such as banking or healthcare.

“There will be 1,000+ language models,” Barrenechea explained, going on to say that around 80% of OpenText’s R&D investment, which is close to $1 billion, is dedicated to preparing for this eventuality.

Personally, I said to Mark that I think there will be more like 1 million, if not 1 billion, language models, whether large, medium, or small, in the next 10-15 years. This is because computing power will become cheaper, coding will become more automated, and data will be made more AI-ready from the start.

Muhi gave a great example of how, in less than 10 minutes and with a few simple prompts, he was able to create 700+ lines of unique code at close to 100% accuracy, which would have taken a decent full-time coder hours, if not days, to write. And that was while he was sitting on the sofa with a football game on in the background.

Muhi explained to The AI Journal that OpenText will never put a large language model such as ChatGPT by OpenAI, Claude by Anthropic, or Le Chat by Mistral AI into production, either in a live or test environment. This makes it interesting that the Copilot they have created in Aviator can form responses purely from on-prem and private clouds.

Additionally, Muhi explained that he has mandated, as a matter of policy, that no OpenText employee is to use company, customer, or partner data in a large language model, specifically because of the risk of bad actors, the risk to the integrity of data, and the risk of aiding hackers’ efforts to overcome its cybersecurity safeguards.

OpenText’s focus on combating deepfakes and misinformation aligns with enterprises’ growing concerns over data integrity and trust. Mark gave a funny but also cautionary example of this: a deepfake video of him requesting a transfer of funds, which was then translated with ease into different languages, including ones as complicated as Japanese.

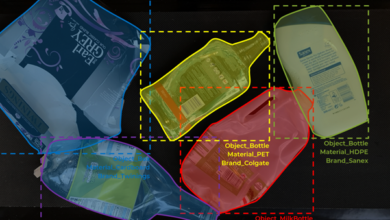

It’s an area that Mark, Muhi, and other members of the team, who were invited up on stage to demo the platform to the crowd, used to emphasize how the Aviator suite offers tools for tracking and managing assets across various sectors, enabling customers to monitor and verify data sources, from lorry temperatures to IoT devices in hospital settings.

Final thoughts from OpenText World

Looking ahead, OpenText’s vision aligns with industry leaders’ calls for leveraging AI to drive sustainability and social impact. Guest speaker Bertrand Piccard shared his vision of using AI to create a “smart grid” that can efficiently distribute energy, education, and water resources based on real-time demand. “AI gives us the possibility to innovate and impact three areas required: curiosity, perseverance, and respect,” he stated.

Overall, OpenText World painted a compelling picture of an enterprise-focused AI company committed to enabling digital transformation while prioritizing ethical and sustainable practices. As businesses across industries grapple with the challenges and opportunities posed by AI, OpenText’s solutions and approach position it as a valuable partner for those seeking to adopt these transformative technologies strategically and responsibly.

We’re excited to see what happens with development of its Aviator suite, comprised of Search, Content, IoT, and DevOps. It’s something I’m sure we will be using as a reference point in upcoming stories and whitepapers. Let’s see if we get invited to the main flagship show, hosted in Vegas in November 2024, to see what progress and developments have happened for Cloud Edition 24.4.

And now I’ll leave you with the closing line that Mark Barrenechea, the CEO and CTO of OpenText, left the audience and me with, one that shows the company’s ambition, excitement, and principled approach to what AI is going to bring: “Let’s go create some digital lightning bolts together.”