Bob K. had not been on Facebook for years. When he went on the site, he found a picture of a jet fighter. Fascinated, he let his mouse hover over the machine guns and rocket launchers. More photos (at least, what he thought were photos) appeared, all of jet aircraft. Again, he lovingly let his mouse hover over the features he found most interesting.

An hour later, he was still on the website. But now images of aircraft were emerging in a continuous scroll that by no stretch of the imagination could be considered real.

One of the last ones he looked at, before closing his laptop, was a phantasmagoric machine with a huge array of missiles, machine guns, and other weaponry coiled up and spilling out of the center of the aircraft like two great eyes looking at him.

The problem was that the collection of armaments was several times larger than the aircraft itself.

“It looked like a sparrow trying to carry a half dozen boa constrictors in its tiny claws,” he said.

Clearly, no such aircraft existed on Earth.

“That was some imagination,” he said, assuming the artist was human.

An unexpected discovery?

Recording his experience was one part of the research I conducted over half a year about Facebook and AI. I had been invited by a leading library association in North America to join a panel about the impact of AI on libraries and journalism. While my immediate goal was to frame an understanding of those questions, the Facebook issue came up along the way, inadvertently.

Facebook and AI

After six months of research, including interviews and attempting to relate them to the most recent scholarship about Facebook, I came up with the hypothesis that Facebook was indeed using AI to shape users’ experiences—but not in the way the company had claimed.

According to Facebook, it now uses AI to enhance a practice it has been following for almost as long as it has been world-spanning—personalizing the user’s experience by feeding him what he wants to see. This is already widely recognized.

“Facebook has extensively utilized artificial intelligence (AI) in various aspects of its platform, shaping its development and enhancing user experiences,” wrote Umamaheswararao Seelam, an assistant professor in the Department of Metallurgical and Material Engineering at the National Institute of Technology, Warangal, in a personal post.

My reporting and potential consequences

But what I seem to have found is that Facebook appears to be tracking mouse hovers and using AI to create new content, rather than simply collating existing content, and feeding this unreal content to the user to target his vulnerabilities.

Meta, the parent company of Facebook, did not respond to requests for comment sent by email earlier in April.

Nor did it respond to further questions about what this could mean in the long run, to wit:

- If, indeed, AI is being used to create artificial content specifically targeted to an individual user, what would stop Facebook from eventually delivering a “user experience” so unique to each individual on the platform that there would no longer be any common language, culture, or medium of communication?

- And, if the individual content becomes increasingly arousing and attractive, heightened by the increasing power of AI—which ferrets out our vulnerabilities—what is to prevent the political and social discord around the world from increasing, fueled by social media?

Getting out in front of regulating AI?

At the same time, Meta has recently been calling for controls on the use of AI on the internet. The U.S. Congress is also calling for such regulation.

Meta is calling for industry-wide standards to make the use of AI transparent—such as by watermarking any content created by AI.

While the efforts may not be fully developed, Meta says it is necessary to make a start. Recent scandals involving deepfakes of President Joe Biden or AI-generated simulacra of high school girls in compromising situations (sexually explicit artificial videos that shame and harm the real victims) expose the danger of AI online. “While this is not the perfect answer,” Nick Clegg, president of global affairs at Meta, told the New York Times in February, “we did not want to let perfect be the enemy of the good.”

But if Facebook is already using AI, why would it want to be at the forefront of regulating it?

A single goal: keep the user chained to the screen

For an answer, even a hypothetical one, we need to look at how we might understand the experiences of Bob and other users on Facebook. Bob’s experience raises the following question: Is Facebook simply using AI to collate already-existing content? Or could it be that Facebook is using AI to personalize a user’s experience by fabricating content entirely, creating appealing images that don’t even exist in the real world?

If true, this would be an ingenious application. Johann Hari, a researcher who has written about the dangers of Facebook and other platforms, has shown that the design of technology, such as smartphones and Internet sites like Facebook, has a single end in mind: to keep the user engaged as long as possible.

This enables the sale of advertising, which migrates to the sites that keep users chained to their screens the longest.

With AI, such results would be multiplied exponentially.

Using dopamine: fear and desire

Attracting the user works by creating content that stimulates the release of dopamine, one of the most powerful mind-sweetening chemicals, often associated with pleasurable activities, and also the target of the multi-billion-dollar pharmaceutical industry, according to Hari.

Yet psychologists have found that the most enthralling experiences are not necessarily those of pure pleasure.

In other words, it’s not merely the chocolate bar or the first kiss that stimulates obsession. Rather, in a well-known study, researchers found that the presence of anxiety, even fear or terror, can heighten feelings of attachment.

In the study, men met a woman while crossing a narrow, swaying suspension bridge.

They were compared with a control group of men who met her without the frightening crossing.

The men who had met the woman on the shaking, quivering bridge were markedly more likely to form an attachment to her than those who met her under relatively safe circumstances.

Voodoo dolls

Such findings were not lost on the engineers who design the powerful algorithms that shape the user experience on sites such as Facebook, according to Hari and others.

“Google and Facebook, by collecting and analyzing years and decades of our search queries, emails, and other data, have compiled voodoo dolls of each of us…Every message, status update, or Google search you conduct is analyzed, cataloged, and stored. These companies are constructing detailed profiles to then sell to advertisers who want to target you specifically,” wrote Jes Oliphant, in an analysis of “Stolen Focus.”

“Starting in 2014, Gmail’s automated systems would sift through your emails to create a personalized advertising profile. If, for instance, you tell your mother you need diapers, Gmail registers you as a parent and starts targeting you with baby product ads. Mention ‘arthritis’ and you’ll likely start seeing ads for relevant treatments,” he wrote.

Knowing our weaknesses

These profiles know our vulnerabilities, our passions, our fears, what titillates us, and what will scare us.

Users feel that their minds are being read, wrote Hari, when advertisements pop up that seem almost to mirror their thoughts.

What is actually happening is that the models of us are so predictive that they can guess our future behavior.

“Aza [Raskin, a leader in early coding] described this process using the metaphor of a voodoo doll. Inside Facebook and Google’s servers, there’s a model of you, initially rudimentary. This model is progressively refined using all the metadata you might deem inconsequential. The result is an eerily accurate digital doppelgänger,” wrote Oliphant.

Tracking our mouse hovers

The models are also addictive.

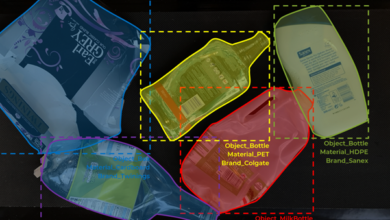

Other researchers have found that internet sites can track our mouse movements, including how long we linger on a particular image. Some contend that, from the position of the cursor, algorithms can predict or “read” which part of the image has most attracted us. As early as 2018, as part of congressional testimony, Facebook admitted it tracks mouse movements to gather more information about the user.

This appears to have been the case with Bob.
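How hover time could be turned into a signal can be sketched in a few lines of Python. This is an illustration only, not Facebook's code: the event format, the region names, and the functions `dwell_times` and `most_hovered` are all hypothetical, and the sketch assumes a page has already logged when the cursor entered and left each part of an image.

```python
from collections import defaultdict


def dwell_times(events):
    """Sum hover time per region.

    `events` is a hypothetical log of (timestamp_seconds, kind, region)
    tuples in time order, where kind is "enter" or "leave".
    """
    totals = defaultdict(float)
    entered_at = {}  # region -> timestamp of the still-open "enter"
    for timestamp, kind, region in events:
        if kind == "enter":
            entered_at[region] = timestamp
        elif kind == "leave" and region in entered_at:
            totals[region] += timestamp - entered_at.pop(region)
    return dict(totals)


def most_hovered(events):
    """Return the region with the longest total hover time, or None."""
    totals = dwell_times(events)
    return max(totals, key=totals.get) if totals else None


# Invented example: a cursor moving over a picture of a jet fighter.
events = [
    (0.0, "enter", "cockpit"),
    (1.5, "leave", "cockpit"),
    (1.5, "enter", "rocket launcher"),
    (6.0, "leave", "rocket launcher"),
    (6.0, "enter", "cockpit"),
    (7.0, "leave", "cockpit"),
]

print(dwell_times(events))
print(most_hovered(events))
```

In this invented log the cursor rests longest on the rocket launcher, so that is the region the sketch singles out. Whether any real platform feeds such a signal into image generation is exactly the open question this article raises.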

Addiction

And yet, another user’s experience seemed to illustrate this feature even more vividly.

Stephen R. (like Bob, he asked that his full name be withheld for privacy reasons) had a similar but much more frightening, or thrilling, experience.

The college counselor had stayed away from Facebook for a decade after he found his students would look up his postings on social media.

But a fight with his wife led him to plunge back in to see if he could see photos of them from a happier time.

He is still trying to shake what happened next out of his mind, he told me.

It started innocently. Stephen had been a big fan of comic strips and used to search online for information about first editions.

So he wasn’t surprised when comic strips started popping up in his feed.

He knew, like most people who have some understanding of how tech companies work, that Google and Facebook create profiles of users’ preferences and interests, mostly for advertising purposes. In the case of Facebook, the company has stated that it keeps track of what its users like in order to feed them what would interest them.

Another case study: explicit images

So when Stephen started seeing Archie comic strips, he wasn’t surprised.

(Archie is a clean-cut comic strip created in the 1940s in the U.S.)

But soon the content began to change—just as the images of airplanes had for Bob.

The Archie comics became more and more explicit.

Someone, he thought—again erroneously—had been redrawing the comics so that they now had erotic themes.

There were three that kept repeating.

Despite himself, he was drawn to linger on each of them.

Betty on Archie’s lap

In one, Betty (the blonde heroine) sat in a short skirt hiked almost up to her waist on the lap of Archie on a park bench.

“I guess you’re not going to make it to your date with Veronica,” she said, referring to her rival, as beads of sweat flew from her head.

Stephen said he looked at the image aghast. It didn’t look like Betty was enjoying it; rather, she was scrunching her rear end into Archie’s lap merely to prevent him from consummating his love with Veronica.

Betty on the beach

Another image popped up. The images became seared into his mind.

This was again of Betty with her legs spread, viewed from the rear, straddling and hugging Archie on a beach. She had her bikini bottom on, but no top.

“You could not see anything really,” he told me. But it appeared, again, Betty was almost entrapping Archie with her full, plump body.

Veronica exposed

Another image showed Archie at a picnic with Veronica, his true love.

Only, in this comic, she was sitting with her panties off, flung to the side.

With the idea of looking for photos of him and his wife forgotten, Stephen said he looked at those three comic strips as they appeared again and again in his feed.

As he watched Betty strain to pleasure Archie while sitting on top of him, or smother him with her breasts as she straddled him, or watched Veronica sit without her panties, he felt a mixture of fear, fascination, horror, and delight.

As he finally left the screen and went to bed, he told me, he could not get the images out of his mind.

It was as if he had seen the visual equivalent of a drug.

Something new: a composite of all three

Finally, after struggling for several days, he went back to Facebook.

The first thing that hit his eyes was a totally new comic strip.

This time, it combined all the most potent elements of the previous three comic strips—which were now nowhere to be seen.

Instead of three separate comic strips, there was now a single one that riveted his eyes to the screen as never before.

It contained the three most powerful and magnetic aspects of each of the earlier drawings.

In this one, Betty was now straddling Archie on the park bench. And she had no panties.

It was a composite of the earlier images.

“That’s when I started to think something more was going on than just seeing drawings that some artist had done,” he said. “Had the algorithm been reading my mouse hovers?”

Stephen, like Bob before him, had unwittingly gotten a taste of how Facebook may be using AI to personalize the user experience by crafting unreal content.

Anything can be altered?

But what does this all mean, and what might it portend for the future?

If, indeed, our experiences online are being shaped and manipulated by AI images, images that are fashioned by an algorithm that knows precisely what will thrill us the most, then increasingly each individual’s experience online may become unique.

There may be no more common language or common content that everyone can see.

AI may subtly shape and shift—like Photoshop does with photos—all images coming our way to bend them into unique pictures or videos to fascinate and enthrall us.

This is, of course, a supposition.

But what’s to stop AI algorithms from rewriting news stories for each individual, altering the contents so that they stimulate and excite each person in unique ways that will keep him chained to his screen?

Epilogue: a conjecture

If that happened, it would be like a drug dealer handing out drugs attuned to our individual DNA to give the greatest high possible.

Could we end up isolated in our own pleasure and horror world with no way to communicate with others?

The only common language that would remain would be that of strong emotion. But aren’t we halfway there already?

More than one-third of the people on earth use Facebook—that is, over 3 billion people.

If each of them is being chained to the site through a deep understanding of their vulnerabilities, what’s to stop them from remaining continuously roused, thrilled, and even traumatized?

Soon, maybe, half the planet will no longer be thinking with their minds, but with their passions. Is it any wonder that wars, turmoil, and conflict have been increasing?