A widening workforce gap and outdated systems threaten the quality of care American patients receive. With demand far outpacing the supply of doctors, nurses, and support staff, overburdened legacy systems struggle to meet expectations.

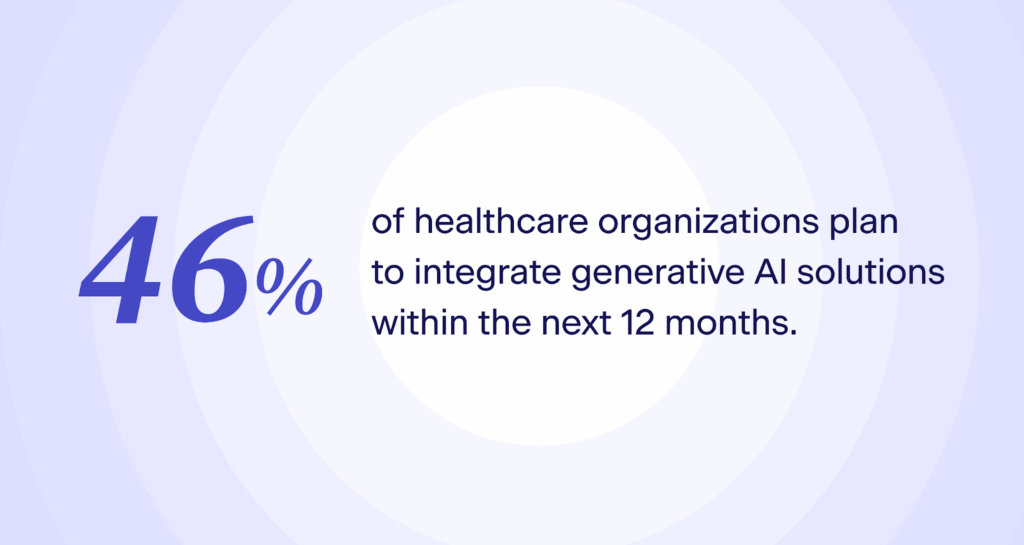

The quest to deliver a better patient experience with fewer operational resources has turned the spotlight on Generative AI (Gen AI). Anticipation is high across the sector for a wave of Gen AI-powered innovations in diverse healthcare applications this year: 46% of healthcare organizations plan to integrate generative AI solutions into their workflows within the next 12 months.

However, while market acceptance is promising, concerns naturally arise with such disruptive technologies in a heavily regulated industry.

Gen AI has the potential to push healthcare forward for both patients and professionals. But it can also pose risks if not implemented cautiously. And though it is safe to expect an influx of Gen AI use cases, such as AI-powered voice and web assistants, development must prioritize three key principles: explainability, compliance, and control.

The Forces at Work

With the arrival of Gen AI, the healthcare landscape is primed for significant change. Over the next year, four key drivers are set to propel AI deeper into medical practice, shaping its future and its influence on patients.

The first is the need for better patient care and more efficient treatment. Healthcare systems often fail to deliver prompt, effective care because of overwhelming patient volumes and backlogs. This is where Gen AI can step in, transforming patient care through the speed at which it analyzes data. From crafting individualized treatment plans that address each patient's needs to accelerating drug development and improving the accuracy of medical imaging and diagnostics, Gen AI could give healthcare providers the tools to deliver flexible, personalized care. By surfacing granular, patient-specific data, Gen AI could give healthcare professionals deeper insights, leading to improved outcomes and, eventually, truly personalized medicine.

The second is the demand for patient-centric experiences and engagement. Patients are tired of frustrating interactions throughout their healthcare journey and want the same convenience and ease they experience when shopping online. Fortunately, the industry is starting to embrace innovative technologies such as AI-powered virtual assistants. These tools could be game-changers for managing appointments, accessing lab results, and communicating clearly and efficiently with healthcare providers. Beyond benefiting patients, they will also free overworked healthcare professionals to focus on their core duties and deliver even better service.

The third is the need for cost savings and innovation. As healthcare organizations recalibrate their technology postures, strategic realignment around these goals has become just as important. Automation, electronic health record (EHR) integration, and tailored business solutions are among the developments well positioned to make healthcare delivery more cost-effective. By understanding the unique business needs of their networks and applying the appropriate technologies, organizations will be better able to formulate data-driven strategies that generate significant cost savings.

Underlying all of this is the fourth driver: the push for greater efficiency and technology adoption. Healthcare organizations are gradually ramping up Gen AI integration to improve efficiency across multiple functions, from business intelligence tools to marketing to IT security. Wider adoption in the year ahead is expected to strengthen content moderation and administrative use cases, laying the groundwork for advances in clinical applications. The path to personalized medicine will be guided by risk-aware decision-making, regulatory support, and risk-reduction procedures built into the AI systems themselves.

The Keys to Responsible AI

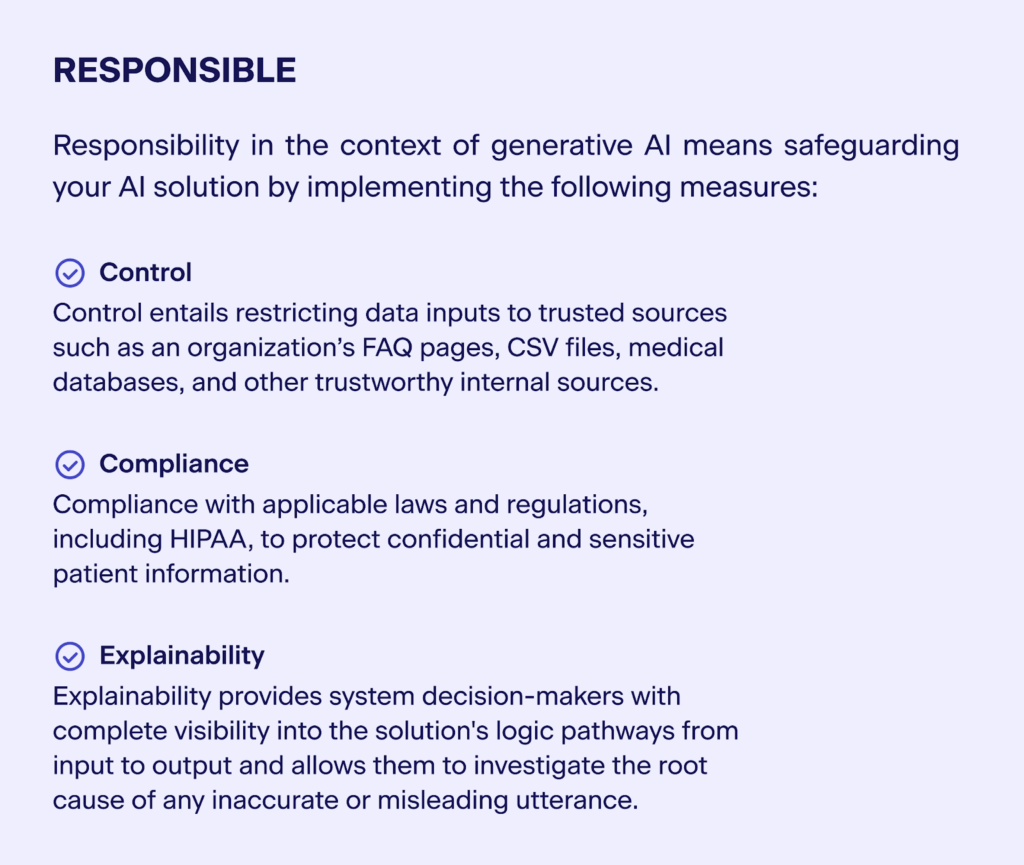

While AI adoption in healthcare offers significant benefits, safeguarding patient privacy and data integrity remains critical. The sensitive nature of health data necessitates robust explainability, control, and compliance mechanisms for responsible AI implementation.

Explainability

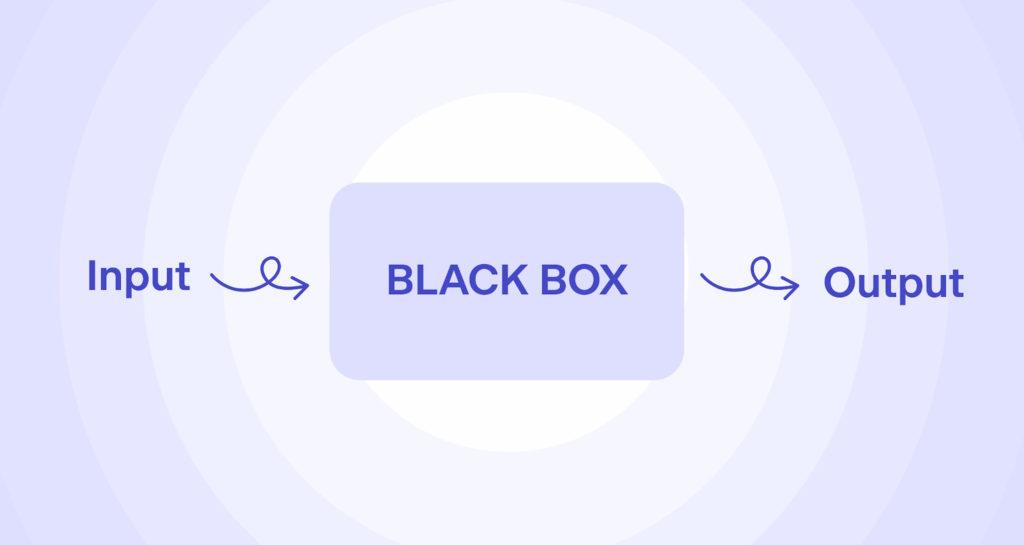

The opacity of machine learning models poses a significant barrier to integrating conversational generative AI assistants into healthcare: they are essentially ‘black boxes’. While these models can provide valuable outputs, their susceptibility to outdated data and manipulation can result in inaccurate responses. Unfortunately, when such failures occur, there is no built-in accountability mechanism for investigating an erroneous output, which makes it impossible to pinpoint the root cause of the error and correct it.

Explainability addresses this by granting visibility into the AI’s decision-making process, allowing its operators to trace the logic from input to output and identify the source of any misleading or inaccurate response. Achieving explainability requires robust data governance: restricting the assistant’s responses to thoroughly vetted internal resources, such as FAQ pages and data sets, which helps prevent biases and inaccuracies.
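To make the idea of a restricted, traceable response source concrete, here is a minimal Python sketch. It assumes a small in-memory set of vetted FAQ entries and uses simple word overlap as a stand-in for real retrieval; the names `VettedEntry`, `VETTED_FAQ`, and `answer_with_provenance`, the entry IDs, and the answer text are all hypothetical. The point is the shape of the design: every answer carries the ID of the vetted source it came from, and the assistant declines when no vetted source matches.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class VettedEntry:
    source_id: str  # hypothetical ID of an internal, reviewed resource
    question: str
    answer: str


# Hypothetical vetted knowledge base; in practice this would be curated content.
VETTED_FAQ = [
    VettedEntry("faq-001", "How do I reschedule my appointment?",
                "Example answer text about rescheduling."),
    VettedEntry("faq-002", "When will my lab results be available?",
                "Example answer text about lab result timing."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a stand-in for a real retrieval method."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def answer_with_provenance(query: str, knowledge_base: list[VettedEntry],
                           min_overlap: int = 2):
    """Answer only from vetted entries and report which entry was used.

    Returns (answer, source_id, score). When no vetted entry shares at least
    `min_overlap` words with the query, answer and source_id are None, so the
    assistant declines instead of improvising.
    """
    query_tokens = tokenize(query)
    best, best_score = None, 0
    for entry in knowledge_base:
        score = len(query_tokens & tokenize(entry.question))
        if score > best_score:
            best, best_score = entry, score
    if best is None or best_score < min_overlap:
        return None, None, best_score
    return best.answer, best.source_id, best_score


answer, source, score = answer_with_provenance(
    "How can I reschedule my appointment?", VETTED_FAQ)
print(source, score)
```

Because each response is tied to a source ID, an operator reviewing an inaccurate answer can go straight to the entry that produced it and correct it there.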

Control

‘Untamed’ LLMs pose risks in healthcare. Their unrestricted harvesting of online data can be counterproductive in such a sensitive domain. Large, uncurated datasets increase the risk of the AI ingesting irrelevant as well as relevant information, leading to “hallucinations”: outputs that sound coherent but are factually incorrect or contextually illogical. These errors can have severe consequences, ranging from misdiagnoses and incorrect prescriptions to reputational damage for healthcare systems.

Striking a balance is crucial, however. Generative LLMs need tight controls, but complete determinism isn’t the answer: their creative potential is valuable, even though unpredictable outputs pose risks. Finding a middle ground where that creativity is harnessed responsibly is essential for safe and effective healthcare AI.
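One widely used knob for this trade-off is sampling temperature, which rescales a model's raw scores before they are turned into probabilities. The article does not name a specific mechanism, so treat this as an illustrative assumption: the Python sketch below shows how a low temperature concentrates probability on the top candidate (close to deterministic), while a higher temperature spreads it out (more varied, less predictable). The function name is hypothetical and the scores are made-up example values.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw candidate scores into probabilities, scaled by temperature.

    Lower temperatures sharpen the distribution toward the top candidate;
    higher temperatures flatten it, so less likely outputs appear more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


example_logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate outputs
for t in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(example_logits, t)
    print(t, [round(p, 3) for p in probs])
```

A moderate temperature, combined with the source restrictions described above, is one way to keep some flexibility in phrasing without letting outputs drift unpredictably.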

Compliance

Navigating the regulatory maze of AI in healthcare requires ongoing agility, not one-time compliance. Meeting the requirements of HIPAA, GDPR, the EU AI Act, and executive orders today isn’t enough; organizations must continuously adapt to a shifting landscape.

Future-proof your generative AI tools: Design them with flexibility in mind to seamlessly adapt to new regulations. Ditch rigid architectures for malleable ones that bend but don’t break.

Data privacy is paramount. Implement robust safeguards that fully redact protected health information (PHI) and personally identifiable information (PII) to prevent exposure to third parties. Transparency is key: build explainable systems that let healthcare providers audit the data sources behind an AI assistant’s output, and rely on traceable internal data sources known for their trustworthiness and reliability.
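As a rough illustration of redacting identifiers before text leaves the organization, the Python sketch below replaces a few well-formatted identifiers with typed placeholders. The patterns, including the `MRN` format, are assumptions made for illustration; pattern matching alone misses names, addresses, and free-text details, so real de-identification needs considerably more than this.

```python
import re

# Illustrative patterns only (assumptions): real PHI/PII covers far more,
# including names, addresses, and free-text details that regexes cannot catch.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"(?:\(\d{3}\)\s?|\b\d{3}[-.\s])\d{3}[-.\s]\d{4}\b"),
    # Hypothetical medical record number format: "MRN" followed by 6-10 digits.
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a typed placeholder and count replacements."""
    counts = {}
    for label, pattern in REDACTION_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


note = ("Patient MRN: 00123456, phone 555-123-4567, "
        "email jane.doe@example.com, SSN 123-45-6789.")
clean, counts = redact(note)
print(clean)
print(counts)
```

Returning counts alongside the redacted text gives auditors a record of what was removed without retaining the values themselves.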

Healthcare in the Not-Too-Distant Future

The integration of Gen AI has the potential to significantly reshape healthcare by driving innovation, streamlining processes, and enhancing patient care. Healthcare organizations are already exploring AI-powered tools to personalize patient experiences, optimize costs, improve operational efficiency, and boost patient outcomes.

However, responsible implementation of Gen AI is crucial to realize its full potential. Considerations like explainability, control, and compliance are essential to ensure the safety and effectiveness of these technologies in the sensitive healthcare environment. Only through responsible development and ethical application can Gen AI truly contribute to a brighter future for healthcare.