There is no denying it: AI will be woven into the fabric of society, with the potential to transform lives, companies, and government. It is therefore vitally important that we consider the ethics and risks of AI.

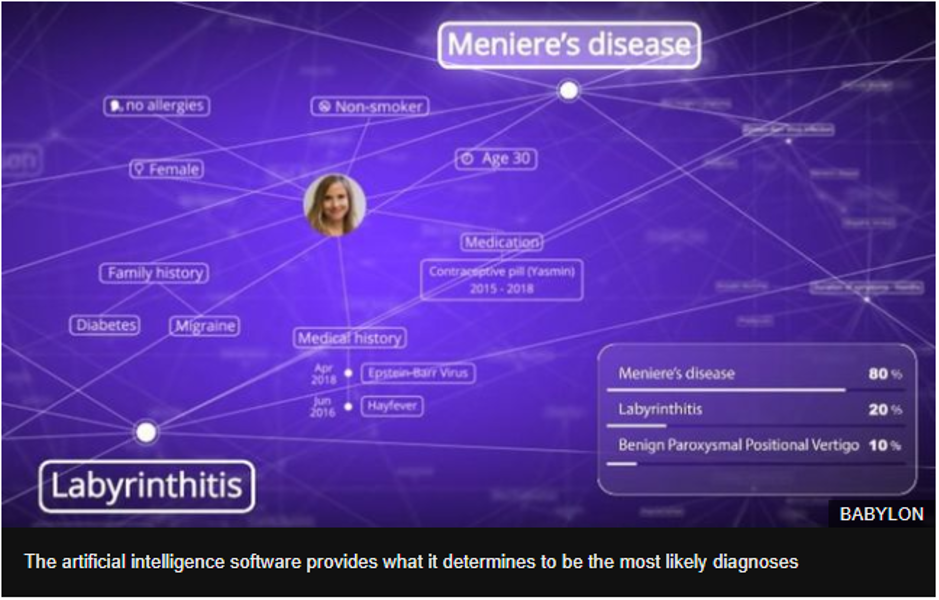

To date, AI has enabled humanity to predict disease outbreaks earlier and more accurately, to improve crop yields in the developing world through predictive plant-disease diagnosis, and even to influence corporate performance, for example by recommending the ‘best’ candidate for a role within HR and by supporting internal processes such as revenue forecasting.

Considering the ethics and risks of AI in regulation

For this reason, it’s not surprising that 62% of CEOs believe that AI will have a larger impact on our day-to-day lives than the internet did. We are already seeing organisations potentially becoming subject to ethical and legal obligations relating to the way they choose to use AI.

With AI-driven business estimated to reach a value of $4 trillion by 2022, AI-specific regulations are inevitable.

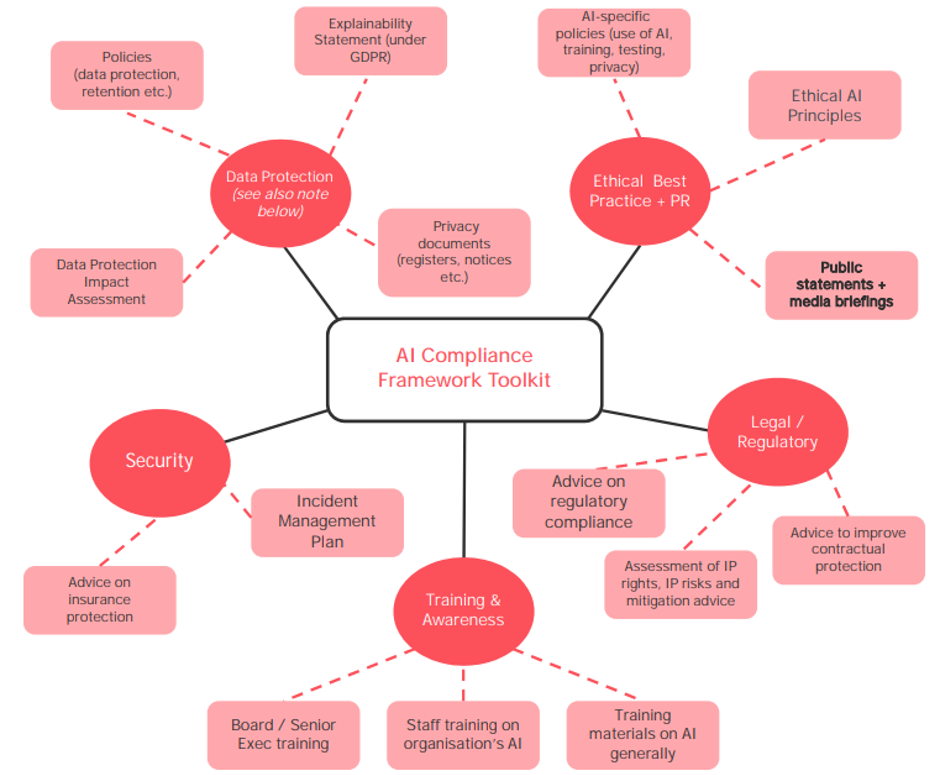

Law firms such as Simmons & Simmons have answered the call by providing companies with an AI compliance framework that supports the deployment of AI and helps them to implement, and adhere to, ethical compliance.

Following a gap analysis, the toolkit is designed to help each firm address the potential legal, ethical, and compliance red flags associated with its use of AI.

Given the current state of the AI industry, it is recommended to keep certain existing frameworks in place.

The ethical dilemma we face with AI

Back in 1976, Robert Duncan stated that AI should be transparent yet explainable, flexible yet efficient, aligned yet adaptable, and radically innovative yet incremental.

How can we achieve such harmony?

Many have tried to answer this question, including Christine Fox, who outlined three phases that could shape how we regulate and decide on the reach and impact of AI and autonomous technology, as you can see in the video below.

In her TED talk, Christine highlights the necessity for a human to control the tech – but what happens when humans enable the tech to control them?

The field of AI ethics has largely emerged to answer this very question.

The range of individual and societal harms that AI systems may cause includes: bias and discrimination (as presented in the New York Times article referred to below); outcomes that are difficult to understand or simply unjustifiable; invasion of privacy; isolation and the disintegration of social connection; and, last but not least, unreliable, unsafe, and poor-quality outcomes.

To our disadvantage, using AI often involves a trade-off between accuracy and interpretability (or explainability).

And yes, one may argue that AI’s failures are as much about human motivations as they are about technology.

However, when creating something ‘new’, shouldn’t we aim for something better?

Do we want to create a human replica, or do we want the technology to overcome human error, eliminate cognitive bias, and provide us with a reliable and steady source of information (if not decision making)?

Who decides the right or wrong ethics and risks of AI?

Attention has shifted away from the science and technology and towards ethics, as everyone wants to have a say and everyone thinks they know the answer. But who decides what’s right and what’s wrong?

Juan Enriquez attempts to answer this question in his TED Talk.

Regardless of all the technological advantages we’re experiencing, we still need patience and humility to address AI and provide frameworks for it.

AI guidelines

One option would be for the government to develop AI guidelines that could include:

- Empowerment – AI should be available to everyone (as promoted by Microsoft);

- Algorithmic accountability – algorithms should be accountable to people;

- Trust – technology should earn trust by being transparent and explainable; and

- Augmentation – AI should augment human capability (rather than reinforce human errors and bias).

AI is having an ever greater impact on our workforce, and it is crucial for employers to start thinking about protective measures, to understand and test these AI systems in their specific environment, and to establish whether they are fit for purpose.
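Testing an AI system in its own environment can start small. As a minimal sketch, assume a hypothetical HR recommender whose decisions have been logged alongside a group label for each candidate. The function names, the log format, and the data below are all made up for illustration and do not come from any real system or legal standard. The sketch compares how often each group is recommended:

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of recommended candidates per group.

    `records` is an iterable of (group, recommended) pairs, where
    `recommended` is True when the system put the candidate forward.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means equal)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was recommended in any group
    return min(rates.values()) / highest


# Made-up audit log: (group label, was the candidate recommended?)
audit_log = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(audit_log)
print(rates)
print(rate_ratio(rates))
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a reasonable prompt for a human to review how the system reaches its recommendations.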

The conflict between automation and autonomy might not be easy to solve instantly.

That said, it’s an exciting space, and it needs regulation that lets the use of AI grow while providing the right frameworks for a secure and open environment.

So far, the only attempt to regulate AI that has been put in place is the Automated and Electric Vehicles Act 2018, which states:

- s.2(1) Where— (a) an accident is caused by an automated vehicle when driving itself… (b) the vehicle is insured at the time of the accident, and (c) an insured person or any other person suffers damage as a result of the accident, the insurer is liable for that damage…

- s.5(1) Where— (a) section 2 imposes on an insurer, or the owner of a vehicle, liability to a person who has suffered damage as a result of an accident … any other person liable to the injured party in respect of the accident is under the same liability to the insurer or vehicle owner.

What the regulator did there, however, was extend responsibility to the insurer, leaving questions of decision-making power, accountability, and liability for the insurer to resolve.

Clearly, this is just the beginning, and governing bodies must pay much more attention to regulating AI and putting compliance frameworks in place. We need to pay close attention to the ethics and risks of AI, not just the benefits. Only by defining and assigning responsibilities can firms feel safe about introducing AI and not lose sleep (and money) over potential lawsuits.