ChatGPT became the fastest-growing consumer application in history after its introduction in November 2022. By January 2023, just two months later, it had an estimated 100 million active users. ChatGPT became extremely popular because it had a winning formula:

· Easy-to-use interface (a text prompt)

· Easy to access (any computer with a web browser)

· Human-like and intelligent responses on a wide variety of topics

For organizations developing Acceptable Use Policies (AUP) for Generative AI applications like ChatGPT, it is important to first assess possible risks and limits associated with this technology. Some of the major risks and limitations include:

· Accuracy: The generated information may be inaccurate or false

· Bias: If training data from the internet is used, the responses can retain the biases present online

· Hallucination: The large language model (LLM) may generate responses that are fabricated and not grounded in facts or source data

· Security: Submitted prompts may be used to train the AI model, so sensitive content entered in a prompt could later surface in responses to other users

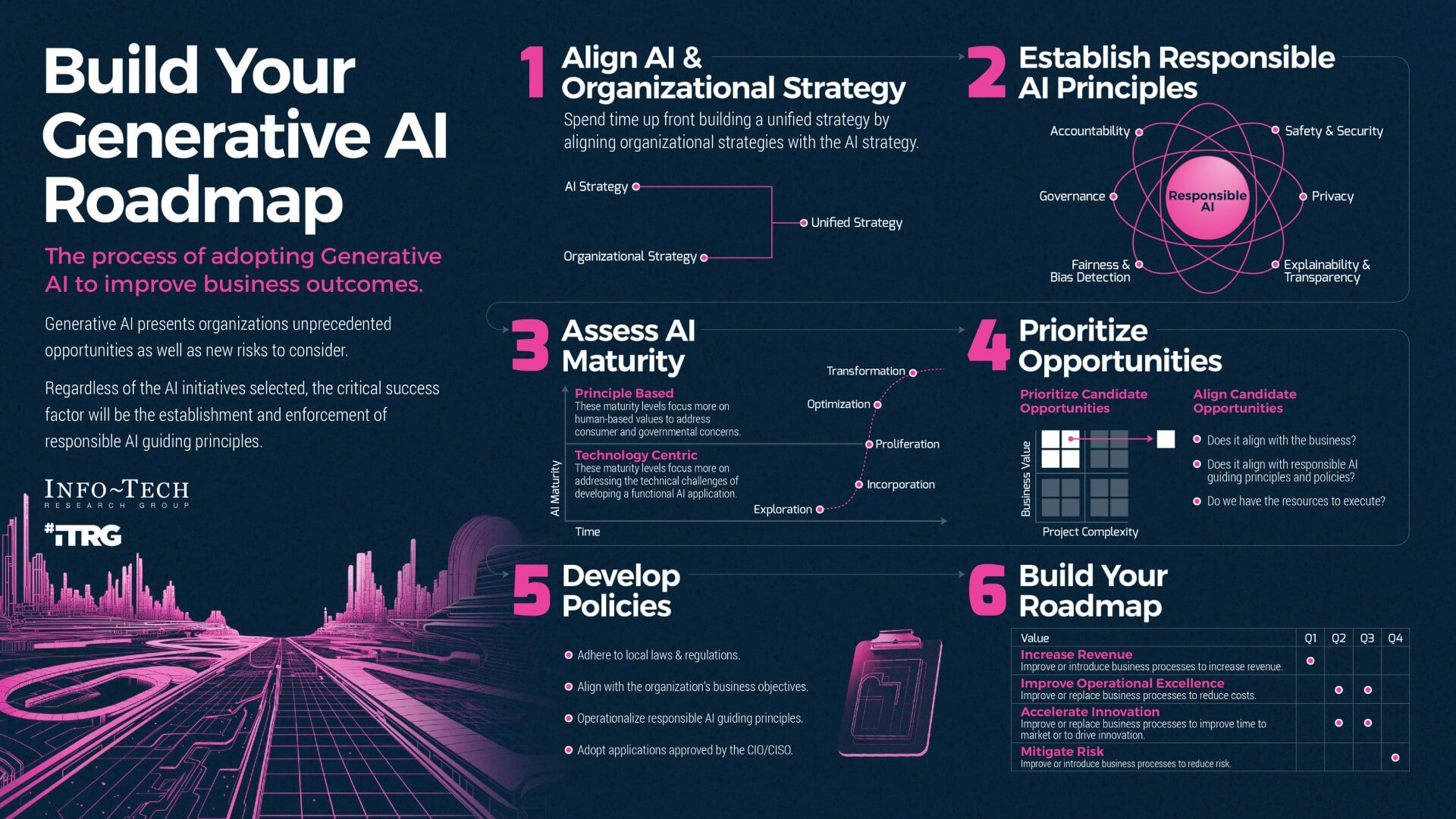

The Role of Responsible AI Guiding Principles

When developing policies for the use of Generative AI, a best practice is to first establish a set of “Responsible AI Guiding Principles.” These principles provide a framework for the responsible and safe development or acquisition of any AI application. Here are fundamental principles to consider:

Today, organizational policies on the use of ChatGPT and similar large language models span a wide spectrum, from outright prohibition to broad adoption. Usage policies should reflect and support the organization’s mission and objectives.

Company Policies for ChatGPT – Examples

Companies often restrict or forbid the use of ChatGPT for several reasons, including:

· Data privacy concerns, such as protecting proprietary information, customer data, or third-party data

· Concerns regarding the accuracy of the responses

· Concerns about potential copyright infringement with the generated responses

Companies at the far left side of the above spectrum have told their employees that ChatGPT cannot be used internally, and some also block access to the application from their corporate systems, often citing the need for data privacy. For example, Samsung initially prohibited the use of ChatGPT but later allowed employees to use it on the condition that they not enter sensitive company data. However, security breaches occurred within a few weeks, leading to a reinstatement of the prohibition.

CNET is one example of how not to apply technologies such as ChatGPT or other LLMs to a specific use case. The company attempted to replace reporters and use the technology to write articles on financial planning. However, numerous complaints about errors in the published articles forced CNET to retract them and apologize to their readers.

Moving to the right of the spectrum, companies adopt broader use cases for Generative AI. The next category permits use but has policies that advise against including confidential, customer, or third-party data in prompts. Finally, the “All In” category includes companies that have implemented ChatGPT in a manner that is exclusive to their employees, safeguarding their data and prompts so they are not shared with the cloud-based Large Language Model.
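The positions on the spectrum can be sketched as a small decision rule. This is an illustrative sketch only: the tier names, the data classifications, and the `prompt_allowed` function are hypothetical, and the assumption that a private deployment accepts every classification is a simplification that a real policy may not share.

```python
from enum import Enum


class PolicyTier(Enum):
    """Hypothetical labels for the positions on the spectrum described above."""
    PROHIBITED = "prohibited"    # no use of generative AI tools
    RESTRICTED = "restricted"    # use allowed, but no sensitive data in prompts
    ALL_IN = "all_in"            # employee-only deployment; prompts stay in-house


class DataClass(Enum):
    """Hypothetical data classifications; real schemes vary by organization."""
    PUBLIC = "public"
    CONFIDENTIAL = "confidential"
    CUSTOMER = "customer"
    THIRD_PARTY = "third_party"


def prompt_allowed(tier: PolicyTier, data: DataClass) -> bool:
    """Return True if a prompt containing `data` is permitted under `tier`."""
    if tier is PolicyTier.PROHIBITED:
        return False
    if tier is PolicyTier.RESTRICTED:
        # Only non-sensitive data may appear in prompts.
        return data is DataClass.PUBLIC
    # ALL_IN: assumed here to accept all classifications (a simplification).
    return True
```

Writing the rule down this way makes the differences between the categories explicit: only the middle tier depends on what the prompt contains.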

Policy Examples

Here are examples of company policies that can be communicated to employees regarding the use of Generative AI:

Company Policy Regarding the Use of Generative AI

While generative AI may be leveraged in your internet searches, there are NO circumstances under which you are permitted to reproduce generative AI (text, image, audio, video, etc.) output in your content.

Company Policies Allowing the Use of Generative AI (with conditions)

If you use generative AI software such as ChatGPT, you need to assess the accuracy of its response before including it in our content. Assessment includes verifying the information, seeing if bias exists, and judging its relevance.

Employees must not:

· Provide any employee, citizen, or third-party content to any generative AI tool (public or private) without the express written permission of the CIO or the Chief Information Security Officer. Generative AI tools often use input data to train their model, therefore potentially exposing confidential data, violating contract terms and/or privacy legislation, and placing the organization at risk of litigation or causing damage to our organization.

· Engage in any activity that violates any applicable law, regulation, or industry standard.

· Use services for illegal, harmful, or offensive purposes.

· Create or share content that is deceptive, fraudulent, or misleading, or that could damage the reputation of our organization.

· Use services to gain unauthorized access to computer systems, networks, or data.

· Attempt to interfere with, bypass controls of, or disrupt operations, security, or functionality of systems, networks, or data.
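The first prohibition in the list above, on submitting employee, citizen, or third-party content, is the kind of rule that some organizations may choose to back with an automated check. The following is a minimal sketch of such a check, assuming a simple pattern-based screen that runs before a prompt is sent. The pattern set and the `find_sensitive_content` function are hypothetical; pattern matching of this kind will miss many cases and is not a substitute for a dedicated data loss prevention tool or for employee training.

```python
import re

# Hypothetical patterns for illustration only. A real deployment would use the
# organization's own data-classification rules.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidentiality marking": re.compile(
        r"\b(confidential|internal only)\b", re.IGNORECASE
    ),
}


def find_sensitive_content(prompt: str) -> list[str]:
    """Return the names of all sensitive-data patterns found in the prompt."""
    return [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]


# Example usage: block or flag the prompt if anything is found.
findings = find_sensitive_content(
    "Email jane.doe@example.com about the CONFIDENTIAL merger"
)
if findings:
    print("Prompt flagged for review:", ", ".join(findings))
```

A prompt that triggers no pattern returns an empty list, so the caller can treat any non-empty result as a reason to stop and ask for review.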

Summary

Companies all over the world are assessing how best to leverage the potential benefits of Generative AI and, at the same time, manage the new risks associated with this technology. The best practice is to align the AI strategy with the organizational strategy and establish “Responsible AI Guiding Principles” before adopting generative AI applications. Education also plays an essential role in the successful adoption of policies by making key stakeholders of the organization, users, and developers aware of the possible risks and responsible use of this technology. Education becomes even more critical when the tools to enforce policies and guiding principles are still being developed.