Last week signaled the opening of legal proceedings by copyright owners against companies that use their copyrighted work to train AI content generation tools. Just as copyright law protects creative work from unauthorised use, privacy law protects private and sensitive data from unfair use, so civil rights advocates will be watching developments in these proceedings carefully.

Stock content giant Getty Images announced legal proceedings against London-based Stability AI, the business responsible for the popular artificial intelligence art generator Stable Diffusion.

Getty claims the company has infringed its property rights by using millions of copyrighted images and associated metadata without permission to train its algorithms. Stability AI CEO Emad Mostaque rejected the claims, saying that the images “are ethically, morally and legally sourced and used.” A spokesperson for the company even went so far as to say that “anyone that believes that this isn’t fair use does not understand the technology and misunderstands the law.”

Creative AI is booming – Stable Diffusion, OpenAI’s ChatGPT and DALL⋅E 2, and Google’s LaMDA are just a few of the bots finding fame for their ability to both amaze and unsettle users by drawing on the database of human knowledge to create nuanced, intricate and creative outputs.

The Getty v. Stability AI lawsuit is not a singular event. On the same day that Getty announced legal action, three artists also filed to sue Stability AI, alongside text-to-image creator Midjourney and DeviantArt, for violating their rights. “We’ve filed a lawsuit challenging Stable Diffusion, a 21st-century collage tool that violates the rights of artists. Because AI needs to be fair & ethical for everyone”, the statement reads.

We’re now seeing a spotlight on the complex, emotional and potentially costly issue of data ownership that’s growing with the popularity of AI tools.

But is it also a warning sign for the control and protection of personal and private data? If someone’s painting, photograph, voice or other data is available online, what should the consequences be of manipulating the data to become something new, untrue and yet still realistic?

These tools greatly increase the risks posed by malicious actors and deepfakes. Take the shockingly convincing, AI-generated, 20-minute conversation between Joe Rogan and Steve Jobs, created 11 years after Jobs's death. Or the ease with which anyone can manipulate an image using DALL⋅E: what previously needed strong image-editing skills can now be done with a simple natural-language query.

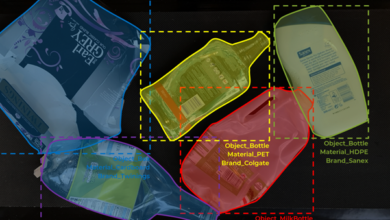

Work has already gone into protecting images against manipulation by AI image creators. Researchers at the Massachusetts Institute of Technology (MIT) have worked out how to alter the pixels in an image slightly, adding an ‘invisible noise’ that throws off AI image generators and makes the end result unrealistic.

This solution works for now, but it’s hard not to assume that AI tools will find a workaround in a game of cat and mouse as they continue to develop with deep learning. The question of their coexistence with human culture will only get bigger.
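To make the ‘invisible noise’ idea more concrete, here is a minimal Python sketch of the constraint behind it: every pixel may change, but only by a tiny, bounded amount. This is not the MIT researchers’ method. Their noise is reportedly computed against the image generator itself, whereas the random noise below would not stop a generator on its own. The function name `add_bounded_noise`, the `epsilon` budget and the sample image are all assumptions made for illustration.

```python
import numpy as np

def add_bounded_noise(image, epsilon=2, seed=0):
    """Return a copy of `image` with each value shifted by at most `epsilon`."""
    rng = np.random.default_rng(seed)
    # Integer noise in [-epsilon, epsilon] for every pixel and channel.
    noise = rng.integers(-epsilon, epsilon + 1, size=image.shape)
    # Add in a wider integer type so the sum cannot wrap around.
    perturbed = image.astype(np.int16) + noise
    # Clip back into the valid 8-bit range before converting.
    return np.clip(perturbed, 0, 255).astype(np.uint8)

# Hypothetical 4x4 RGB image filled with mid-grey pixels.
image = np.full((4, 4, 3), 128, dtype=np.uint8)
protected = add_bounded_noise(image, epsilon=2)

# The largest per-pixel change never exceeds the epsilon budget.
difference = protected.astype(np.int16) - image.astype(np.int16)
max_change = int(np.abs(difference).max())
print(max_change)
```

A change of one or two levels out of 255 is invisible to most viewers, which is why this kind of protection can be applied without spoiling the picture. The hard part, which this sketch leaves out, is choosing the noise so that it actually misleads a model.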

AI models learn from massive databases – usually scraped from the internet – and personally identifiable and sensitive data within these must be protected. However, regulation in technology often comes after the developments themselves, when privacy and security concerns come to a head. With the current rise in AI-centred lawsuits, policymakers may have their latest data conundrum to address.

Beyond GDPR and other data protection regulations, you also need technology to keep data secure. Alongside the kind of work that is happening at MIT, technology that anonymises and redacts private data will help protect it and limit the extent to which malicious actors can use it unethically.
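As a rough illustration of what redaction can look like in practice, the Python sketch below replaces email addresses and phone numbers in a piece of text with placeholder labels before the text is stored or shared. The two patterns, the labels and the sample sentence are assumptions chosen for this example. Real anonymisation tools need far broader coverage, including names, addresses and images.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(text):
    """Replace every match of each pattern with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

# Hypothetical sample text containing two pieces of personal data.
sample = "Contact Jane at jane.doe@example.com or +44 20 7946 0958."
redacted = redact(sample)
print(redacted)  # prints "Contact Jane at [EMAIL] or [PHONE]."
```

Note that the name “Jane” survives untouched. Simple pattern matching catches structured identifiers, but recognising names and other free-form personal details takes more sophisticated techniques.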

There are fundamental challenges around privacy and ethics, and how they play out in data privacy regulation will be as fascinating as it is complex.

For now, lawyers, technologists, policymakers and compliance personnel are closely watching the developing legal battles against AI tool creators, as these issues of credit, compensation and consent, and ultimately of privacy and freedoms, unfold.

It is hard to overstate the importance of what’s at stake here. Just as artificial intelligence reshapes our attitudes to culture and creativity, it also has the power to alter our relationships to each other, and even to our own sense of self. It’s one of the central issues of our time, and the debate must be held out in the open.